It's the unlikely story of a 25-year-old computer brought back to life in the era of artificial intelligence. A team from EXO has managed to run a language model based on the LLaMA 2 architecture on a PC with a 350 MHz Pentium II processor and 128 MB of RAM. The operating system? Windows 98, of course.

Back to basics

To meet this challenge, the team relied on an architecture called BitNet. Traditional models can require tens of gigabytes of memory and a state-of-the-art graphics card. BitNet instead uses ternary weights (-1, 0 and 1), so each weight needs only about 1.58 bits (log2 3 ≈ 1.585) rather than the 16 or 32 bits of conventional floating-point weights. As a result, a model with 7 billion parameters fits into 1.38 GB of storage.
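To make ternary weights concrete, here is a minimal C sketch. It assumes the "absmean" scheme described in the BitNet b1.58 paper (divide the weights by the mean of their absolute values, then round and clip to -1, 0 or +1); the function names are hypothetical and not taken from EXO's or Microsoft's code, and activations are kept as plain floats for simplicity. It also shows why ternary weights are cheap to compute with: the dot product needs only additions and subtractions, plus a single multiplication by the scale at the end.

```c
#include <stddef.h>

/* Hypothetical helper: quantize n float weights to {-1, 0, +1} with an
   absmean scale gamma = mean(|w|). Returns gamma so that results can be
   rescaled after a dot product (w is approximated by gamma * q). */
float ternary_quantize(const float *w, signed char *q, size_t n)
{
    const float eps = 1e-6f;   /* guards against division by zero */
    float gamma = 0.0f;
    size_t i;

    if (n == 0) return 0.0f;
    for (i = 0; i < n; i++)
        gamma += (w[i] < 0.0f) ? -w[i] : w[i];   /* sum of |w| */
    gamma /= (float)n;

    for (i = 0; i < n; i++) {
        float v = w[i] / (gamma + eps);
        /* Rounding to the nearest integer and then clipping to [-1, 1]
           is the same as thresholding at +/-0.5. */
        if (v >= 0.5f)       q[i] = 1;
        else if (v <= -0.5f) q[i] = -1;
        else                 q[i] = 0;
    }
    return gamma;
}

/* Approximate dot product of the original weights with x: the loop has
   no multiplications, each weight adds, subtracts or skips x[i]. */
float ternary_dot(const signed char *q, float scale, const float *x, size_t n)
{
    float acc = 0.0f;
    size_t i;
    for (i = 0; i < n; i++) {
        if (q[i] == 1)       acc += x[i];
        else if (q[i] == -1) acc -= x[i];
    }
    return acc * scale;
}

/* Storage in bytes for n_params weights at bits_per_weight bits each. */
double storage_bytes(double n_params, double bits_per_weight)
{
    return n_params * bits_per_weight / 8.0;
}
```

For instance, `storage_bytes(7e9, 1.58)` gives about 1.38 × 10^9 bytes, in line with the 1.38 GB figure. This sketch stores one weight per `signed char` for readability; a real implementation packs weights more tightly, for example five ternary values per byte, since 3^5 = 243 fits in 256.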

The model that actually ran on the machine, stories260K, is tiny: as its name suggests, it has only about 260,000 parameters. It obviously doesn't have the power of ChatGPT, but it generates text at around 39 tokens per second. A modest result, but enough to demonstrate that AI can run on older consumer hardware.
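A figure such as "39 tokens per second" is typically obtained by timing the generation loop and dividing the number of tokens produced by the elapsed time. The C sketch below illustrates that calculation under stated assumptions: the function names are hypothetical (only `time_in_ms` echoes the name of llama2.c's timing helper, which on POSIX systems calls `clock_gettime`), and the timer here uses the standard `clock()` function, which even 1990s compilers provide. Note that `clock()` measures processor time rather than wall-clock time; for a single-threaded, CPU-bound loop the two are close.

```c
#include <time.h>

/* Hypothetical timer: milliseconds of processor time used so far,
   based on the C89 clock() function. */
long time_in_ms(void)
{
    return (long)(clock() * 1000.0 / CLOCKS_PER_SEC);
}

/* Throughput: tokens generated divided by elapsed seconds. */
double tokens_per_second(int n_tokens, long elapsed_ms)
{
    if (elapsed_ms <= 0) return 0.0;   /* avoid division by zero */
    return n_tokens / (double)elapsed_ms * 1000.0;
}

/* Average time spent on each token, in milliseconds. */
double ms_per_token(double tok_per_s)
{
    return tok_per_s > 0.0 ? 1000.0 / tok_per_s : 0.0;
}
```

For example, 390 tokens in 10,000 ms gives 39 tokens/s, which is about 1000 / 39 ≈ 25.6 ms per token.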

This retro-tech project required much more than a simple download. To make it work, the team had to deal with the limitations of a vintage PC. No modern USB keyboard or USB drive could be used: back to PS/2 devices and good old FTP to transfer files!

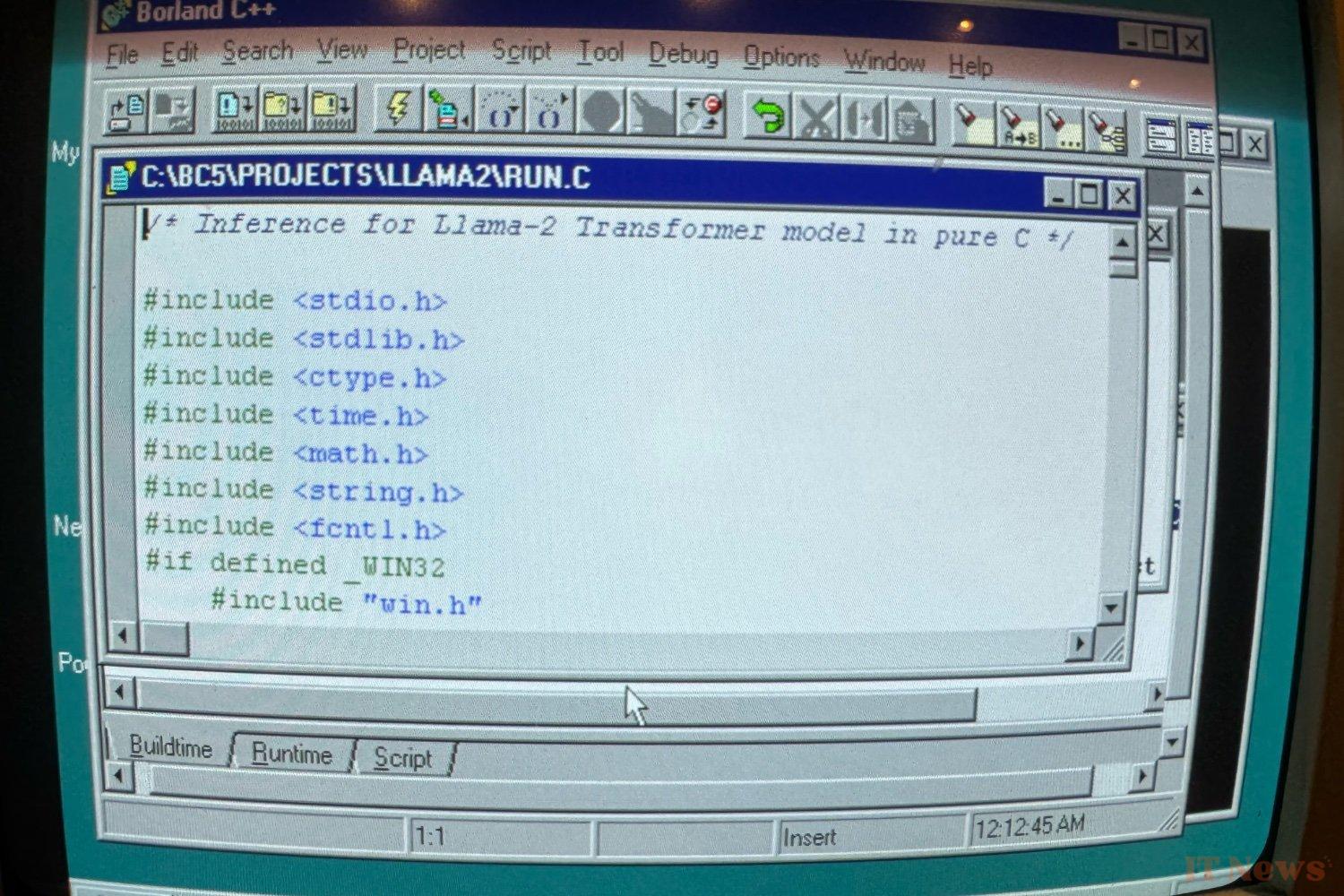

Compiling the code was also something of an archaeological dig. Modern compilers were out: Borland C++ 5.02, released in 1998, was used to build an adapted version of llama2.c, Andrej Karpathy's minimalist implementation of Llama 2 inference in a single C file. Some adjustments were necessary, such as replacing data types the old compiler did not support and reworking the code that reads the system clock.
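The exact changes are not detailed here, but a common way to build C99-style code with a pre-C99 compiler is a small compatibility header that supplies the missing fixed-width integer types. The sketch below is hypothetical: it assumes the code relies on `<stdint.h>` types, uses `__BORLANDC__` (the macro Borland compilers predefine) to detect the old toolchain, and assumes the usual type sizes of a 32-bit x86 target, which should be checked against the actual compiler.

```c
/* compat.h (hypothetical): fixed-width integer types for old compilers. */
#if defined(__BORLANDC__)
/* Borland C++ 5.x predates C99 and has no <stdint.h>.
   The sizes below assume a 32-bit x86 target. */
typedef signed char    int8_t;
typedef unsigned char  uint8_t;
typedef short          int16_t;
typedef unsigned short uint16_t;
typedef long           int32_t;
typedef unsigned long  uint32_t;
#else
#include <stdint.h>    /* modern compilers: use the standard header */
#endif

/* Compile-time size checks that need no C11 static_assert: if a
   condition is false, the array size becomes -1 and compilation fails. */
typedef char check_int8 [sizeof(int8_t)  == 1 ? 1 : -1];
typedef char check_int16[sizeof(int16_t) == 2 ? 1 : -1];
typedef char check_int32[sizeof(int32_t) == 4 ? 1 : -1];
```

On a modern compiler the standard header is used unchanged, so the same source can build on both toolchains, and the size checks catch a wrong typedef at compile time rather than as corrupted model weights at run time.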

The team sums up their achievement with humor: "If it runs on a 1998 PC, then it can run anywhere."

Beyond the technical feat, the project has a broader ambition: to make artificial intelligence more accessible. Today, the majority of AI models run on remote servers in data centers. This is an expensive, energy-intensive solution that relies on large cloud platforms.

EXO offers an alternative: running models locally, directly on users' own hardware, even modest machines. BitNet fits this approach thanks to its ultra-compact weights. EXO claims that, with this method, a model with 100 billion parameters could theoretically run on a single CPU at a speed comparable to human reading (5 to 7 tokens per second).
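A rough calculation shows why ternary weights make this plausible, at least in terms of memory. Assuming, as in the 7-billion-parameter example, about 1.58 bits per weight, and ignoring activations and other overhead, the weights of a 100-billion-parameter model take about a tenth of the space they would need at 16 bits:

$$
100 \times 10^{9} \times \frac{1.58}{8}\ \text{bytes} \approx 19.75\ \text{GB},
\qquad
100 \times 10^{9} \times \frac{16}{8}\ \text{bytes} = 200\ \text{GB}.
$$

This only covers storage; the actual speed also depends on the CPU and memory of the machine.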

This approach opens up unexpected possibilities: integrating AI into old phones, forgotten computers, or even embedded devices without a permanent connection to the cloud.

What's next? EXO promises open-source tools for those who want to try it on other old machines, and is working on integrating ternary models into specialized fields, such as protein modeling.
