On paper, Apple Intelligence is a dream come true. The suite of tools developed by Apple promises to revolutionize the use of the iPhone with features like writing tools, image-correction tools, notification summaries, and even ChatGPT integration. The Cupertino giant certainly took a while to launch its AI, and even longer to bring it to Europe, but this time it's for real: Apple Intelligence should arrive on our iPhones and iPads in April.

That's why we didn't hesitate long before installing the iOS 18.4 update on our iPhone 16 Pro Max. After a few days of use, we quickly became disillusioned. We'll explain why in detail.

Of course, this Apple Intelligence test was conducted on the beta version of Apple's OS. This cannot be considered a definitive opinion of Cupertino's AI, as the final version can still improve the user experience. However, it is rare for an update to change its content radically a month before its final launch, which is why we decided to publish this first opinion.

Writing assistance: when Apple's AI lacks finesse

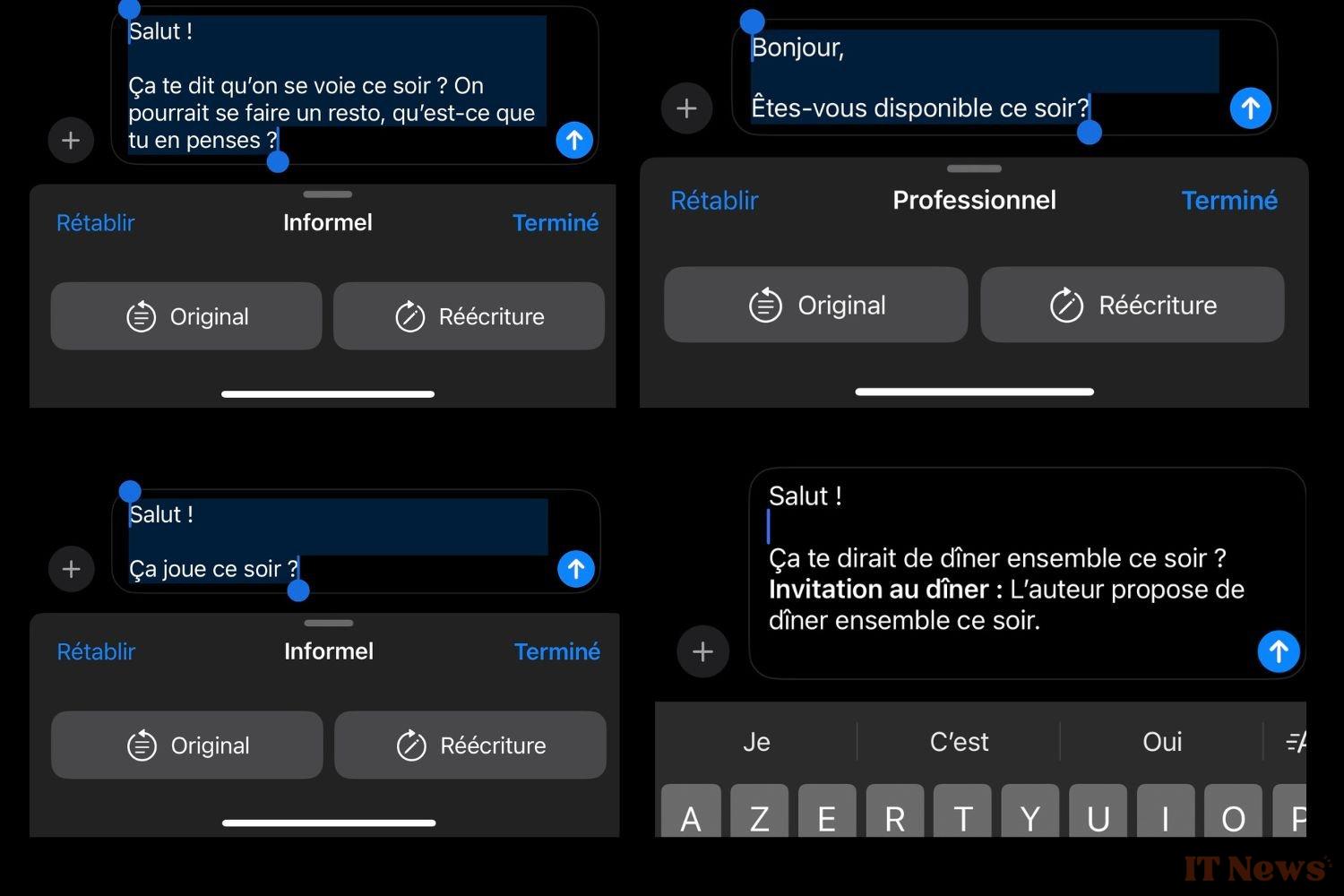

Once we installed the iOS beta, we rushed to test Apple Intelligence's writing assistance tools. These allow you to rework a text with the help of generative AI. The function appears automatically via a context menu as soon as you write something, whether in messages, emails, or another application.

In theory, the tool allows you to rely on AI to design messages and texts calibrated according to your needs. In practice, we often had the impression that the AI provided by Apple lacks finesse and understanding of the French language. We are far from the results obtained with ChatGPT, Grok, or even Claude. When we "convert" the message sent to a loved one, we are rarely impressed by the result. In fact, we would often achieve a better result just by thinking a little. The options offered by Apple, such as concise, professional, or informal rephrasing, remain amusing, but they are not really useful. In general, we ask AI to write a message for us, rather than rewrite it.

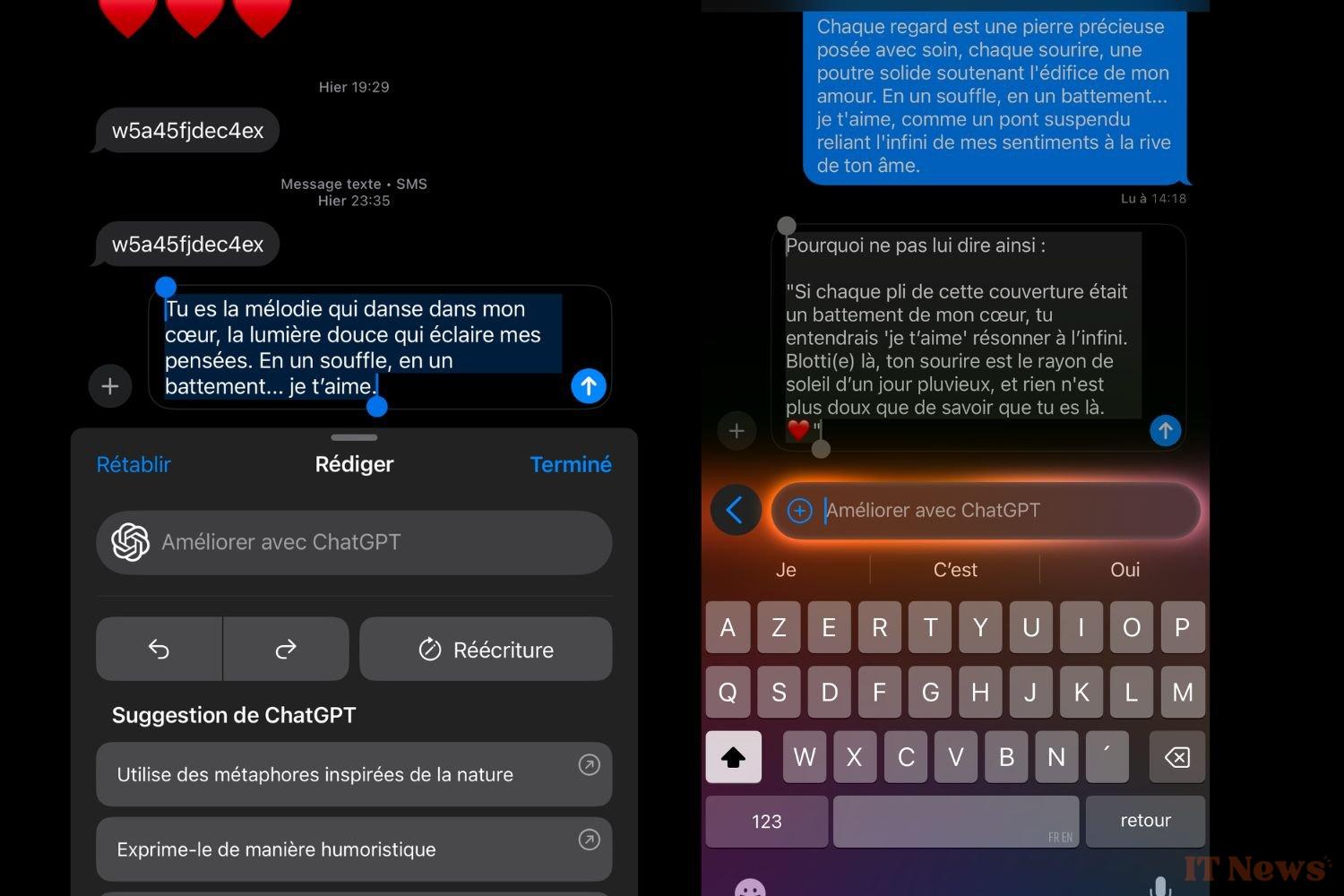

For more convincing results, it is essential to call on ChatGPT, now integrated OS-wide, directly into the writing tools. When it comes to writing, Apple's AI model, which runs locally, is not up to the task. Using ChatGPT obviously results in more accurate and relevant content.

Despite everything, we haven't gotten into the habit of using Apple Intelligence in our daily activities, whether for writing texts or emails. This is not for lack of interest: we regularly use AI to review text or obtain summaries while working on our iPhone. The lack of precision of Apple's AI simply prevented us from integrating it into our routine. After two weeks of use, we only use the writing tools occasionally.

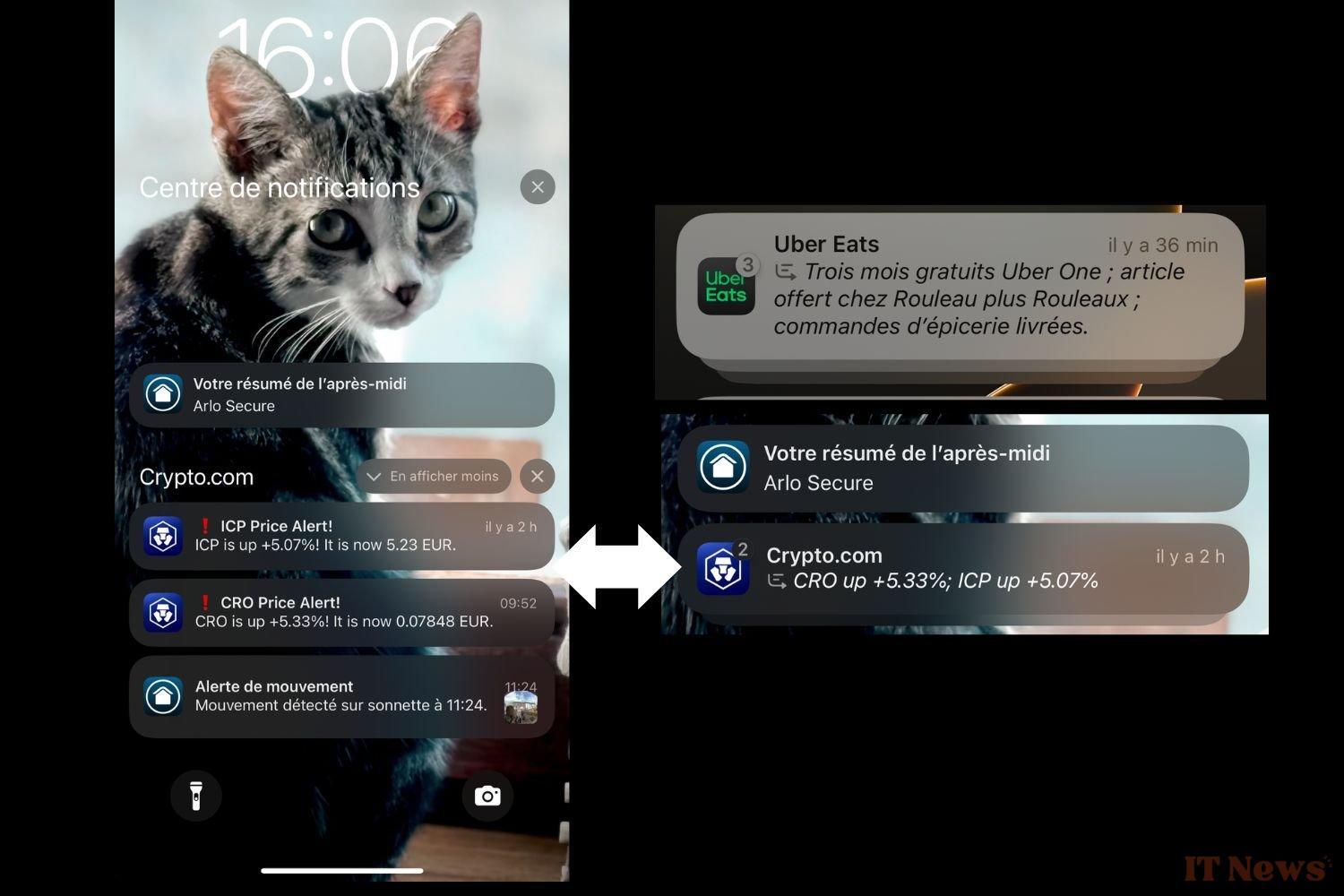

Imperfect summaries

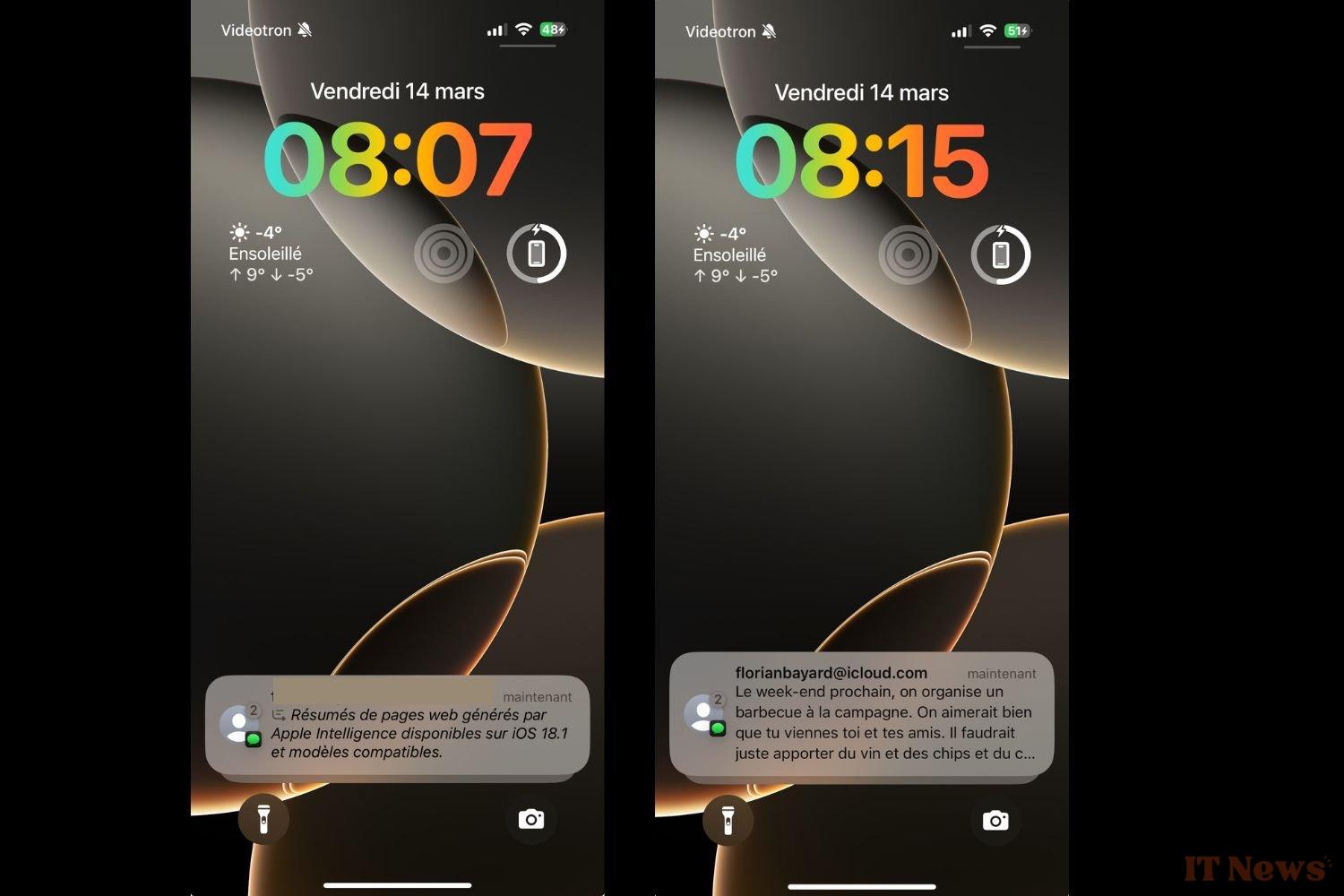

The notification summary particularly disappointed us. The feature should automatically offer summaries of notifications that appear on your iPhone. It's primarily designed to summarize long news notifications into a short text that will save you time. Unfortunately, Apple was forced to suspend the news feature following numerous user-reported glitches. Apple Intelligence began spreading misinformation by incorrectly summarizing content. Some apps are now excluded from the program.

Furthermore, the language model chosen by Apple, which runs locally directly on the iPhone, has often proven incapable of providing a relevant summary. Often, you have to click on the notification to fully understand what it is about. The meaning of the summary is sometimes very different from that of the original message.

Most often, the function is simply inactive. We continued to receive our notifications the same way as before. In practice, Apple Intelligence summarizes about one out of two text messages, seemingly at random. Sometimes, the AI condenses notifications sent by a crypto platform about the price of digital currencies into a single message. In these cases, the option works, but it's hard to find a real benefit. The summary too often lacks readability. Above all, Apple Intelligence doesn't kick in consistently enough to have a real impact on the way we interact with our notifications. On this point again, Apple has remained a little too timid.

The same goes for the new focus mode, which relies on Apple Intelligence. While it proves quite effective in blocking unwanted notifications, it somewhat duplicates the focus modes we've already configured. If you already have several modes calibrated to your activities, you won't notice much difference by enabling Apple Intelligence.

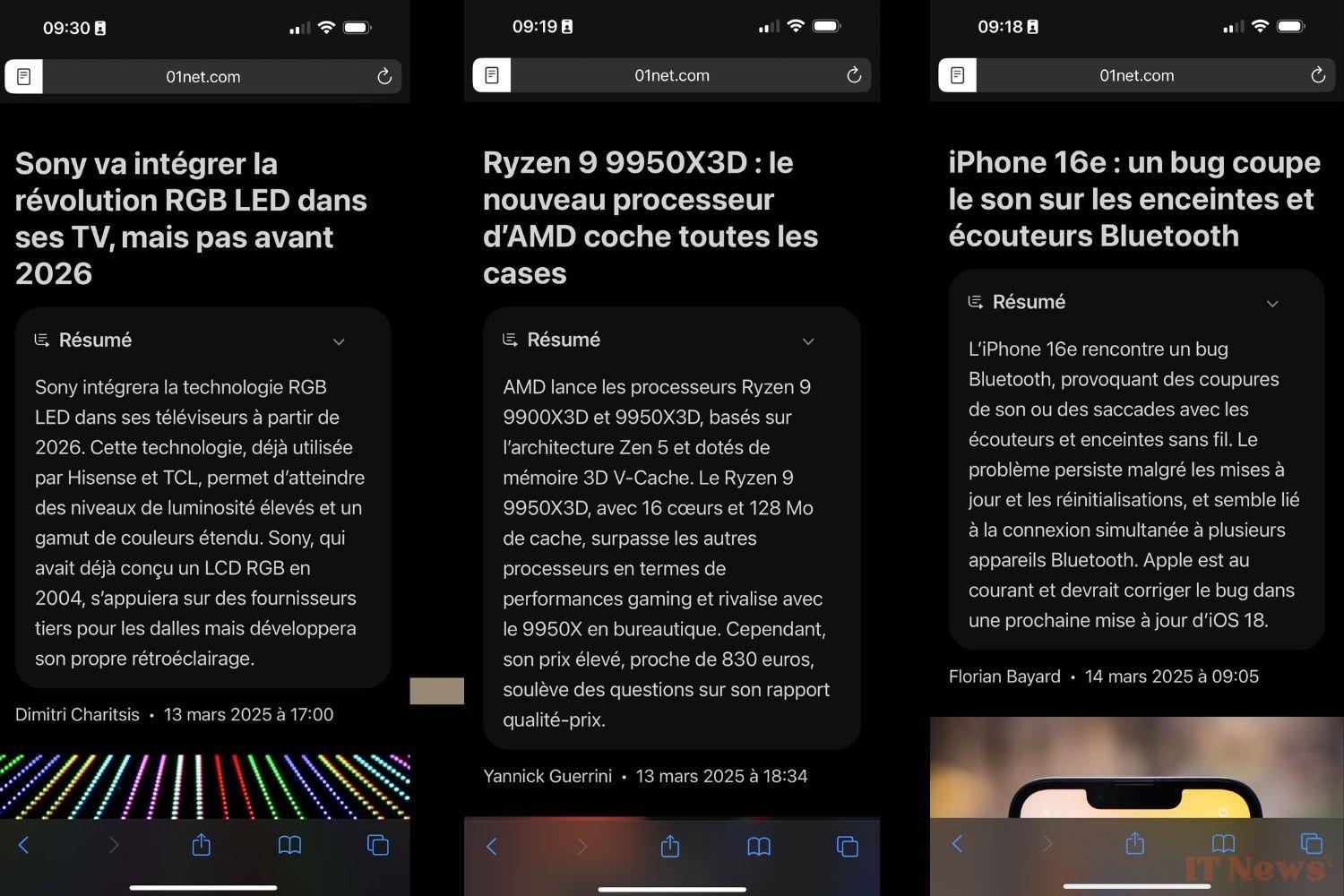

We also tested the article summaries in Safari. We tried the feature with a host of articles found online. In most cases, Apple's AI works as expected and produces a succinct summary of the content. On longer articles, the AI misses the point a bit, and sometimes focuses on anecdotal elements.

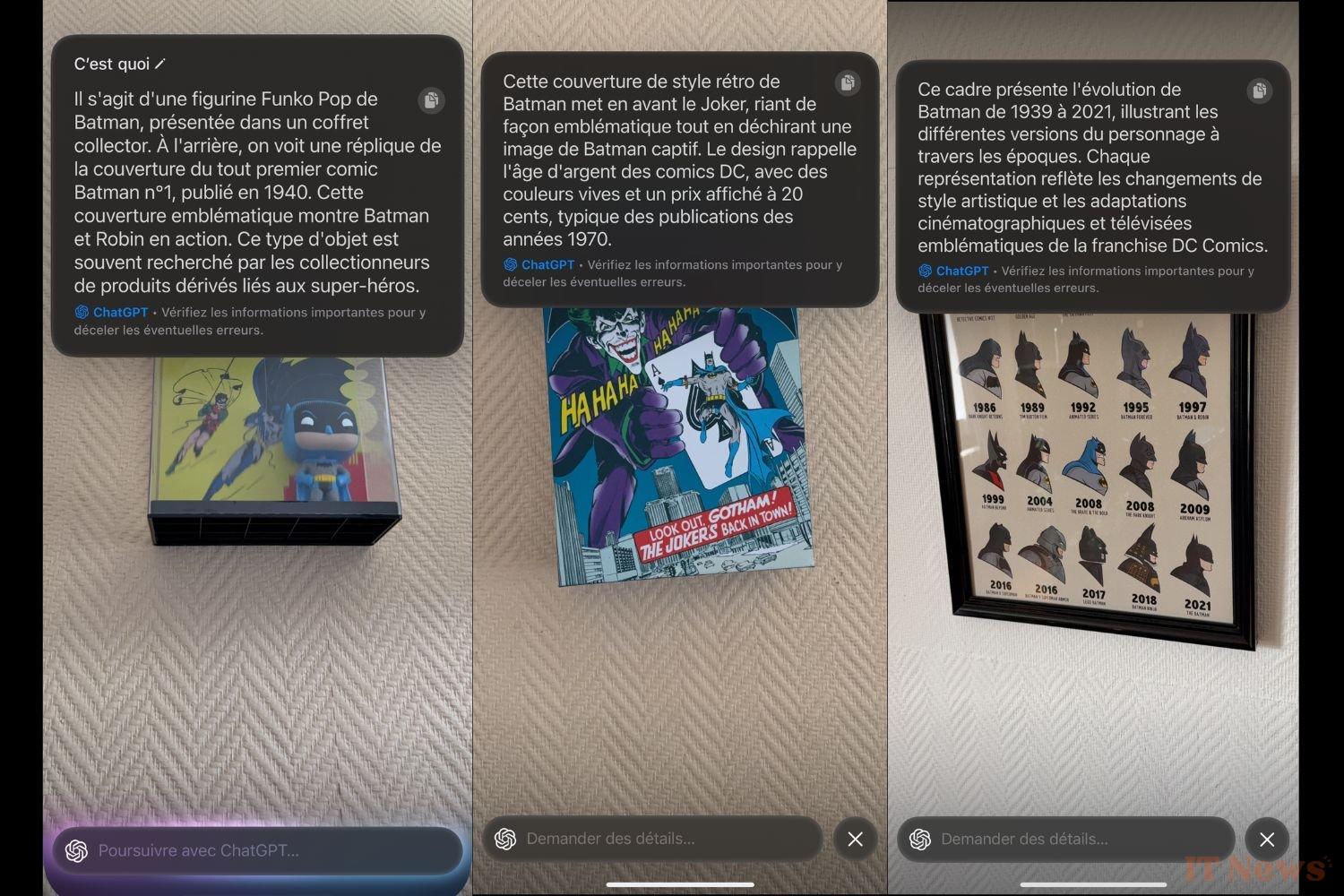

Visual Intelligence, a good surprise

Visual Intelligence is one of the good surprises of Apple Intelligence. Through the camera, it is possible to ask the AI to provide you with information about what is in front of you. You can activate this mode by long-pressing the iPhone 16's new Camera Control button.

The function relies directly on ChatGPT. As soon as you arrive in the Apple Intelligence interface, a conversation window invites you to converse with ChatGPT. OpenAI's AI will then interpret the image and describe what is in front of you. In this respect, ChatGPT is very effective. It quickly provides brief explanations of what it sees. ChatGPT has proven difficult to trick, although it can be a bit lacking in precision. In the past, ChatGPT has often been used to understand how an unfamiliar machine, such as a boiler or a hot tub, works. Integrated into the iPhone camera, it becomes a true everyday assistant, easy to summon with a single gesture.

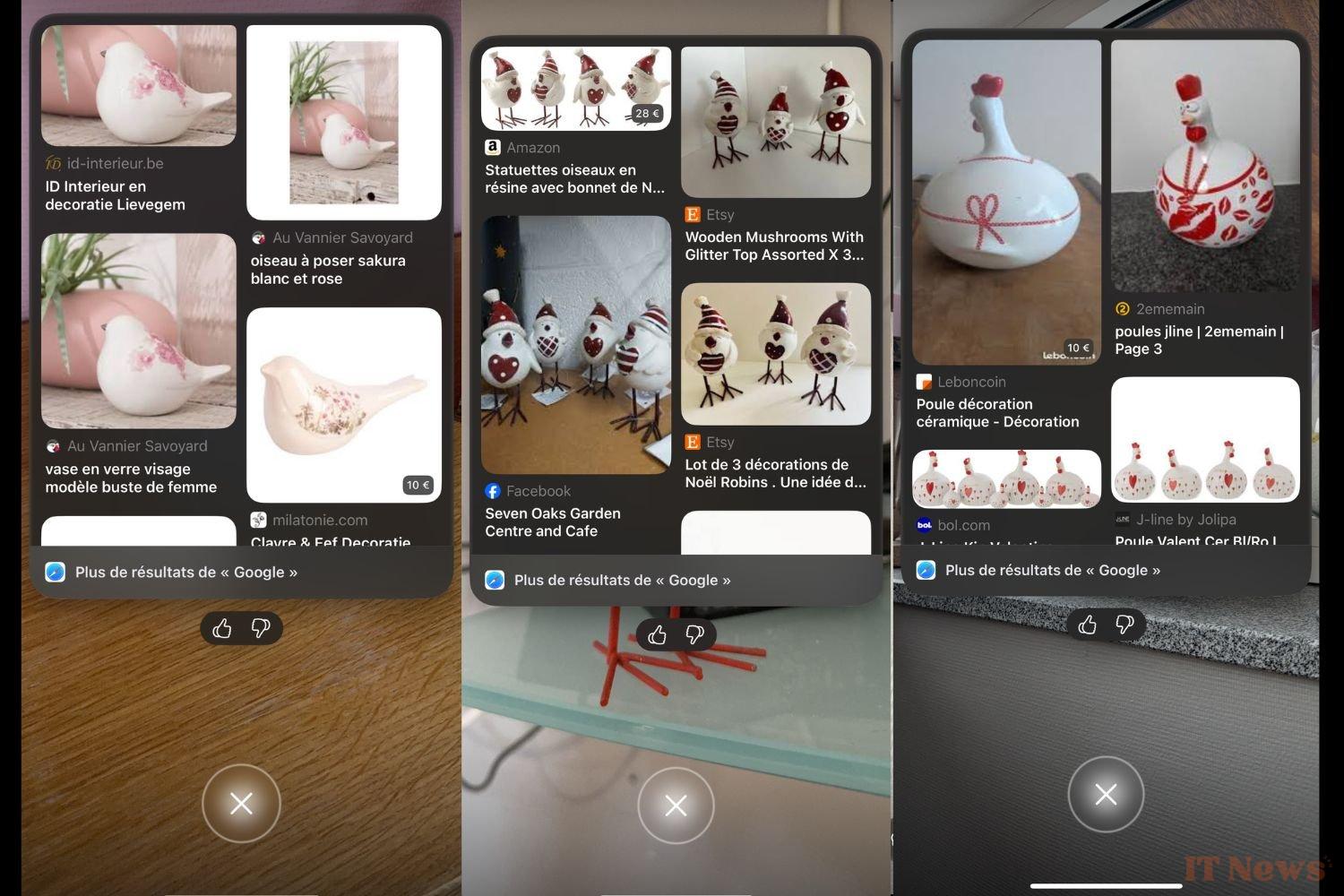

Furthermore, Visual Intelligence has a tool similar to Google Lens. This function allows you to search online for objects similar to the one in front of you. It's far from revolutionary. However, we appreciate that this feature is making its way to iOS.

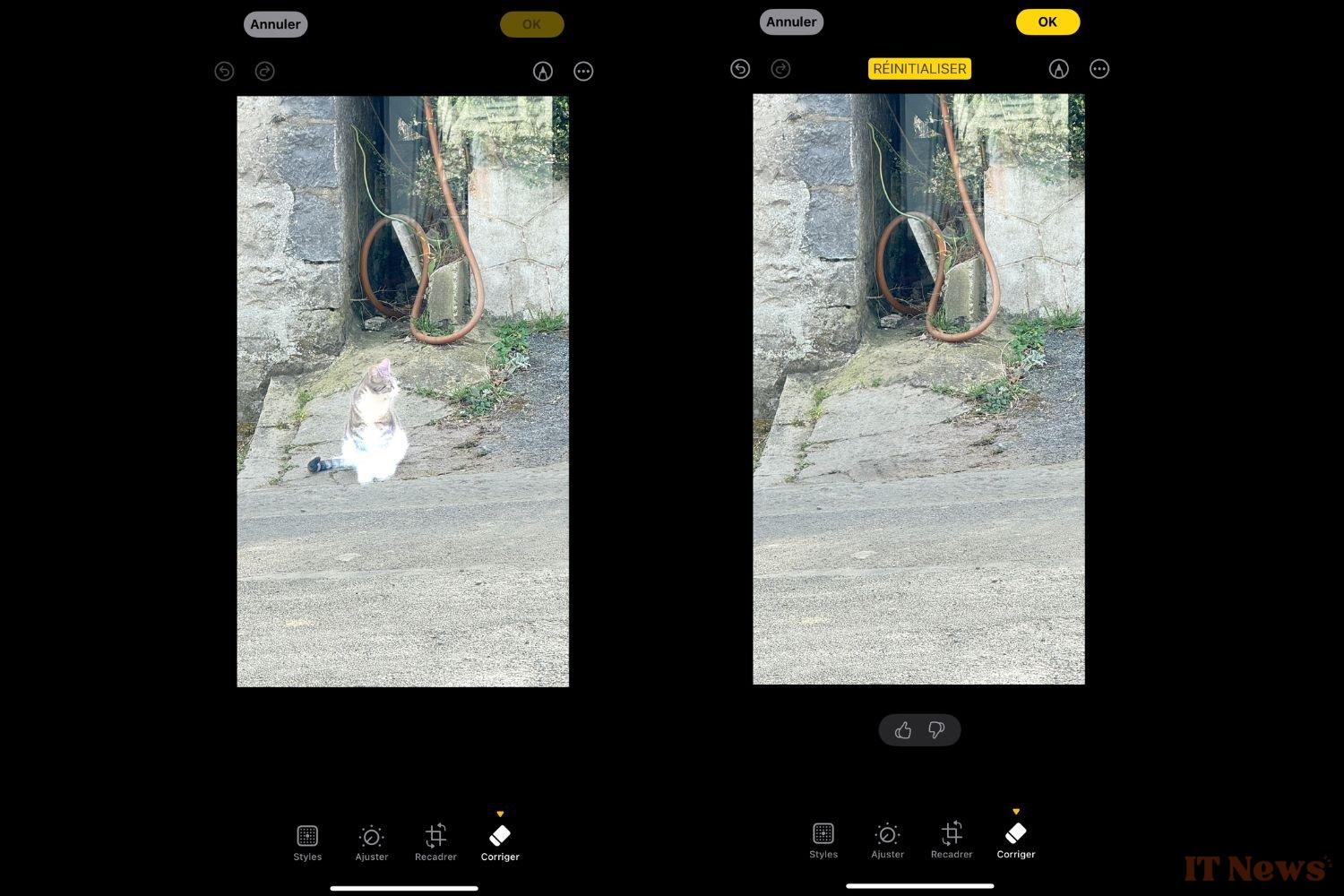

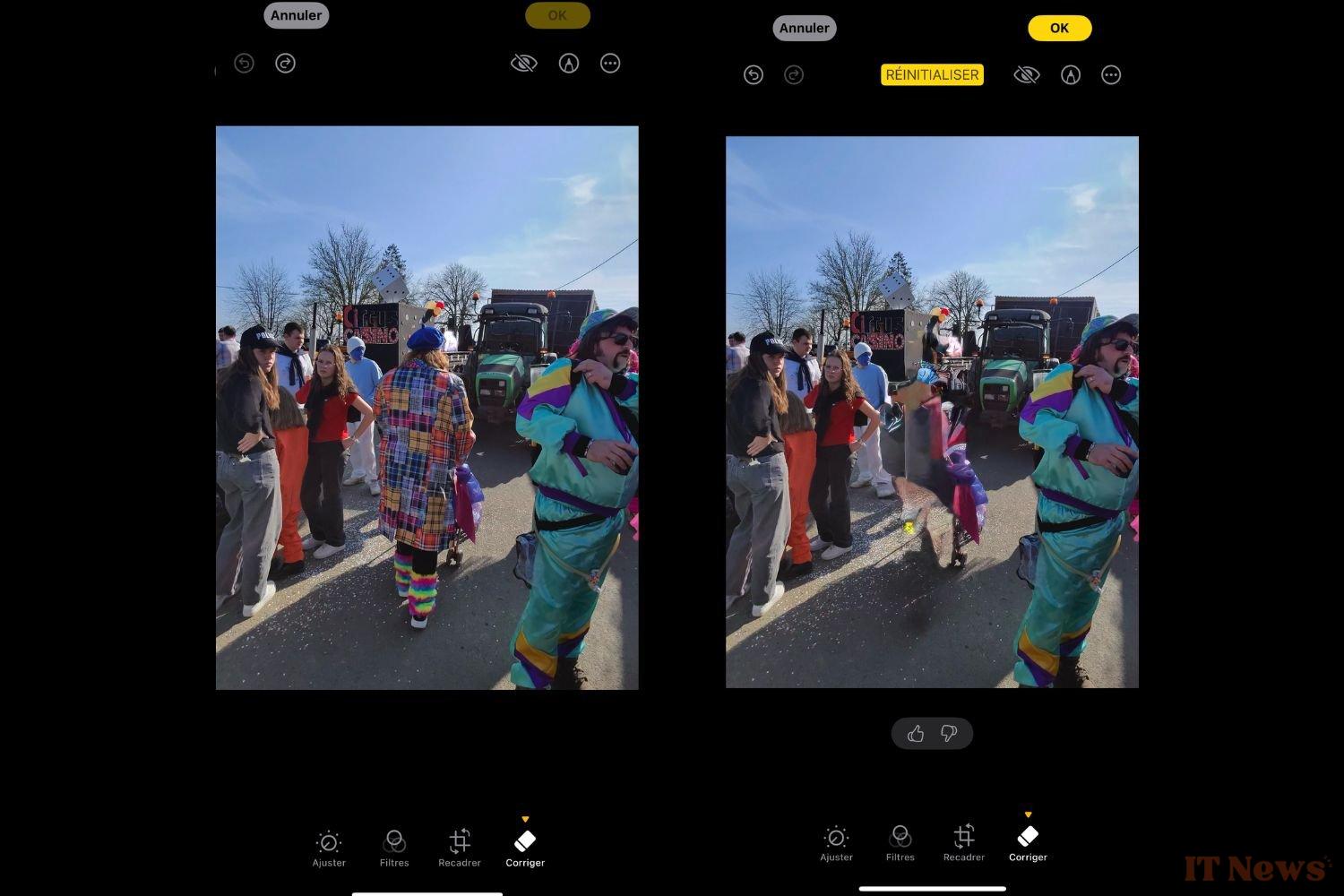

The Eraser, Genmoji, and Playground

Also worth mentioning is the Clean Up feature added to the Photos app. This allows you to remove an element from an image with a single tap. The results are hit-or-miss. Typically, the eraser stroke leaves visible marks on the image. Don't expect to get rid of a tractor driving by in the background that ruins your vacation photo. The eraser only really works well with small items.

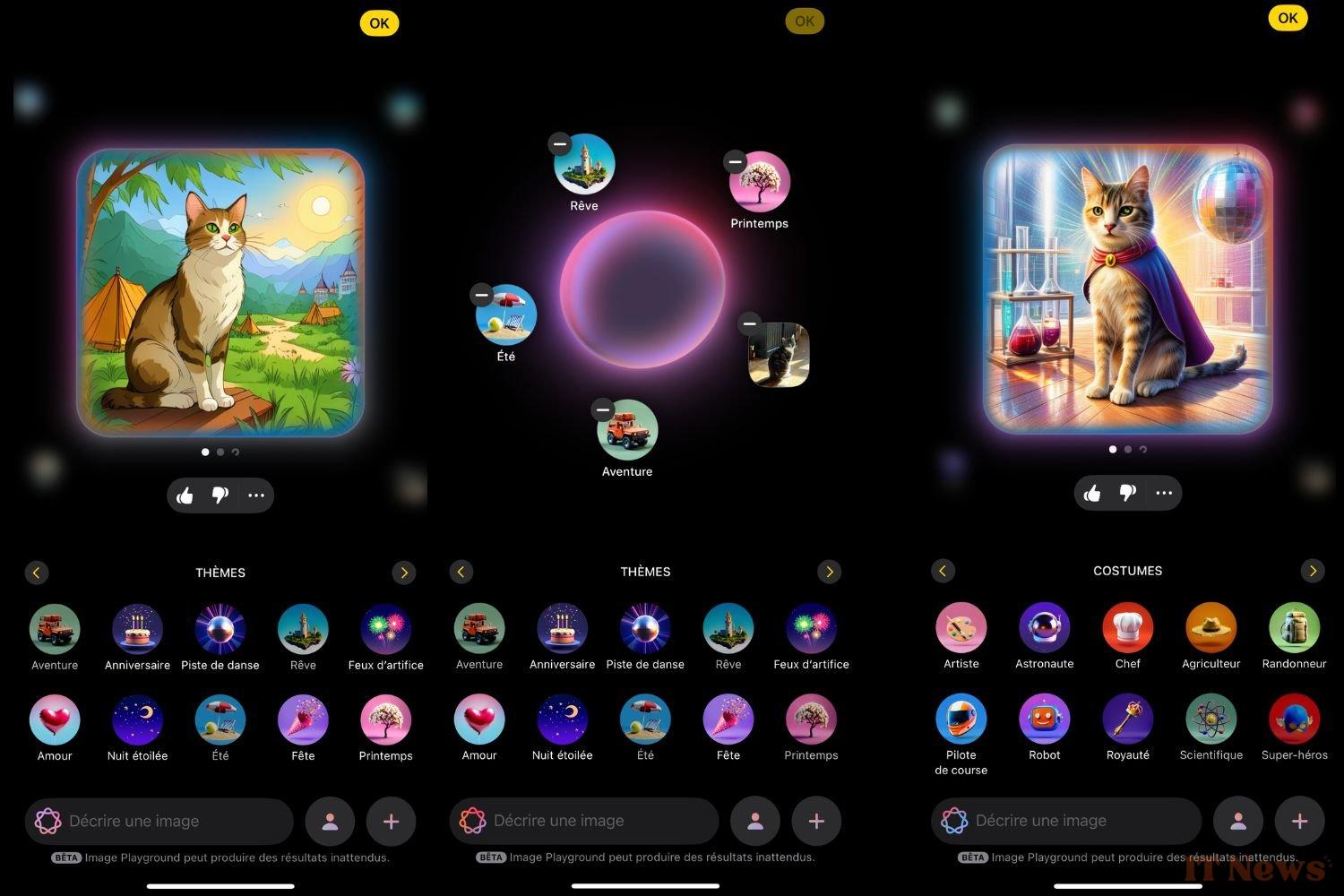

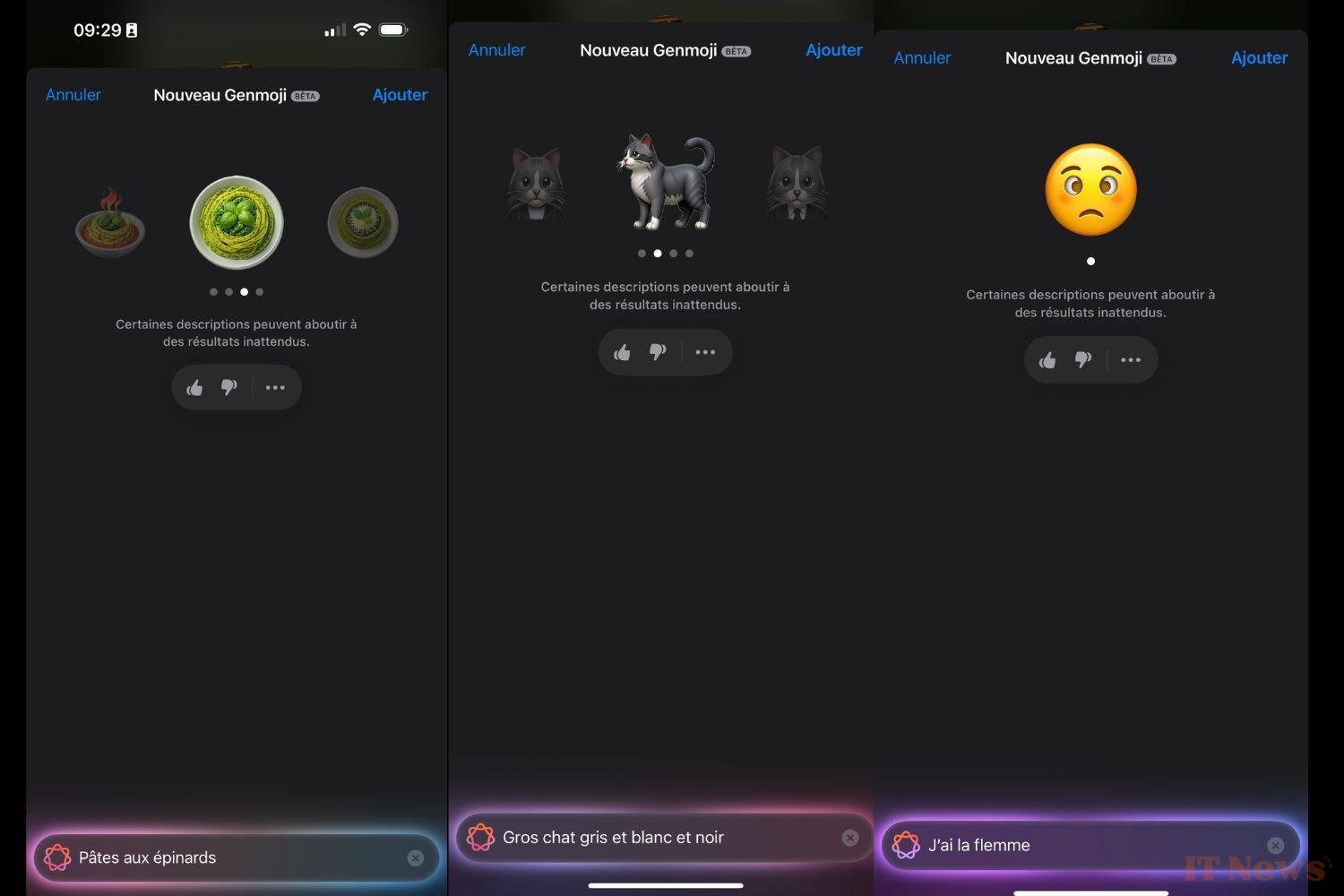

We also had a little fun with the Genmoji and Playground features. In short, they're an emoji generator and an image generator. As expected, they're mostly gadgets, in line with the Animoji introduced alongside the iPhone X and Face ID facial recognition.

The tools work pretty well, and the generated images are generally successful, although simplistic. They're primarily intended for fun rather than professional use. Apple stands out thanks to the Playground interface, which is fun and easy to use, but falls short on the power of the AI that generates the images. The entire process takes place locally on the iPhone, which likely limits the possibilities offered while heating up the device and reducing its battery life. We would have liked Apple to delegate image generation to a third-party AI, such as ChatGPT.

Ultimately, we find that Genmoji, whose promises are less ambitious, is more successful than Playground. Integrated into iMessage, Genmoji has allowed us to enrich some of our conversations with our loved ones. It's quite fun, but, once again, we don't use the option regularly after the discovery phase.

Because of the beta, we encountered a lot of bugs while using these features. This was especially the case with Playground, which simply refused to generate a single image for days. A second beta update was required to regain access to the features.

ChatGPT, Apple Intelligence's flagship asset

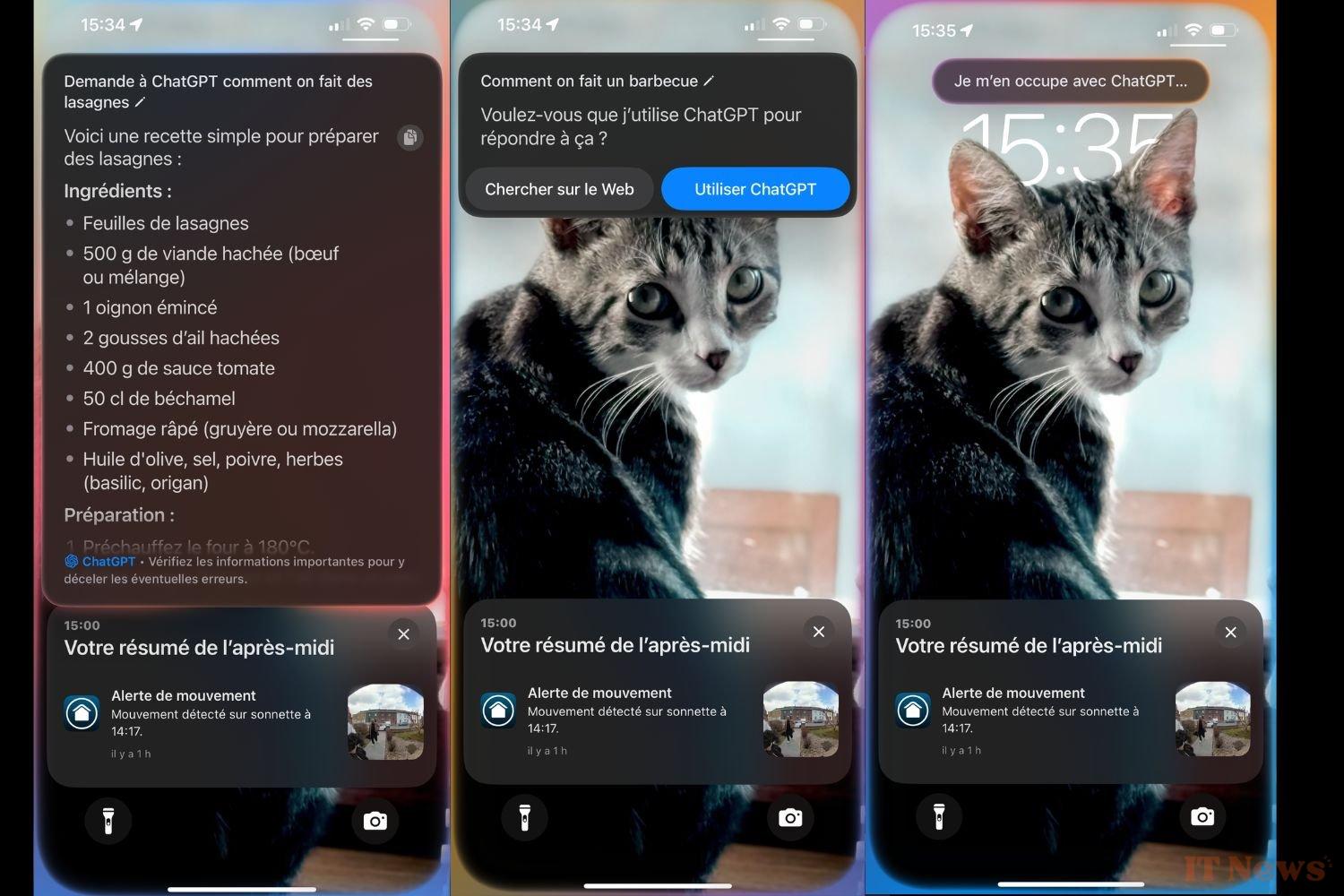

Integrated into iOS, ChatGPT is designed to assist Siri when it is unable to answer users' questions. In practice, Siri doesn't yet have the reflex to ask ChatGPT for help whenever necessary. The voice assistant often simply responds that it is unable to help the user. To summon OpenAI's chatbot, you often have to explicitly tell Siri to put the question to ChatGPT. This is particularly cumbersome. With the second beta deployed a few days ago, the situation has significantly improved. Now, Siri tends to summon ChatGPT more often when needed, but its integration still has room for improvement. We end up in a situation where a voice assistant answers us with a block of text...

This is far from being a revolution given that ChatGPT has already been available on the iPhone via the OpenAI application since last year. We used to call ChatGPT via the Action button on our iPhone, thanks to the very practical Shortcuts application, or on the home screen via a widget.

Despite our efforts, the reflex to ask ChatGPT for information via Siri hasn't become a part of our daily lives. However, the presence of ChatGPT makes perfect sense in writing tools or Visual Intelligence. We can very easily obtain high-quality content to enrich our text messages or emails without leaving the application. In fact, ChatGPT remains Apple Intelligence's biggest strength. It's the iPhone's main asset in the field of AI... This is indicative of how far Apple has fallen behind in this area.

With ChatGPT, we would have liked Apple to go even further. We would have liked OpenAI's AI to be truly integrated into all layers of the OS, so that we could easily call it up when using any application, or to entrust it with more complex tasks. Apple would do well to take the logic of Visual Intelligence, within which ChatGPT intervenes smoothly and automatically, and extend it to the entire operating system.

A key feature still missing

Let's keep in mind that this is still only a beta version. This is why Apple Intelligence's French functions are riddled with bugs, slowness, and other glitches. Furthermore, it's worth remembering that some of the flagship features are not yet available, months after the announcement. This is especially the case for Siri 2.0, which is still a long way off. By Apple's admission, the development of the voice assistant is taking longer than expected. The new Siri won't be ready until next year. Apple Intelligence will therefore remain deprived of its main functionality for quite some time.

Over time, Apple Intelligence could well evolve and take a more important place in our daily lives. As it stands, Apple-style AI is just a gimmick. The future Siri could well change the game by unifying the operating system with AI features, but it's not ready to show up just yet...

Extremely low usage

Apple is not fooled. As Mark Gurman, a journalist at Bloomberg, reports, the Californian giant has realized that iPhone users are not making extensive use of Apple Intelligence features. According to the company's internal data, Apple Intelligence usage is extremely low. Despite Apple's marketing efforts, AI hasn't become a new selling point likely to boost iPhone sales. Users tend to shy away from the iPhone's AI features.

Despite Apple's promises, Apple Intelligence hasn't had a real impact on how we use our iPhones. In fact, our usage has remained marginal. Several days often go by before we use Apple Intelligence at all. A colleague even discovered that Apple Intelligence had been disabled on their iPhone without their having noticed. This anecdote illustrates the extent to which Apple's AI lacks impact.

An Overly Cautious Approach?

The current failure of Apple Intelligence stems primarily from Apple's deployment schedule, which has slipped significantly. The company has chosen to roll out its AI features in a piecemeal manner. As a result, the currently available version of Apple Intelligence is incomplete, and it often feels like we're testing a solution that's still in development. Apple's decision to rely largely on AI models that run locally, rather than on remote servers, also limits the capabilities available. This approach aims to protect user privacy, but it curbs the ambitions of Apple Intelligence.

Moreover, Apple has remained a prisoner of its overly conservative approach. AI features were added superficially to the iPhone's operating system, without ever disrupting the fundamentals of the interface. With AI, Apple could have revolutionized the way iOS works. Instead, Apple Intelligence was quietly, almost apologetically, integrated into the iPhone without disrupting our use. This is why Apple Intelligence hasn't changed our daily lives. According to rumors, iOS 19 should correct this situation with a real, and welcome, overhaul of the iPhone's interface for the age of AI. We're eagerly awaiting it.
