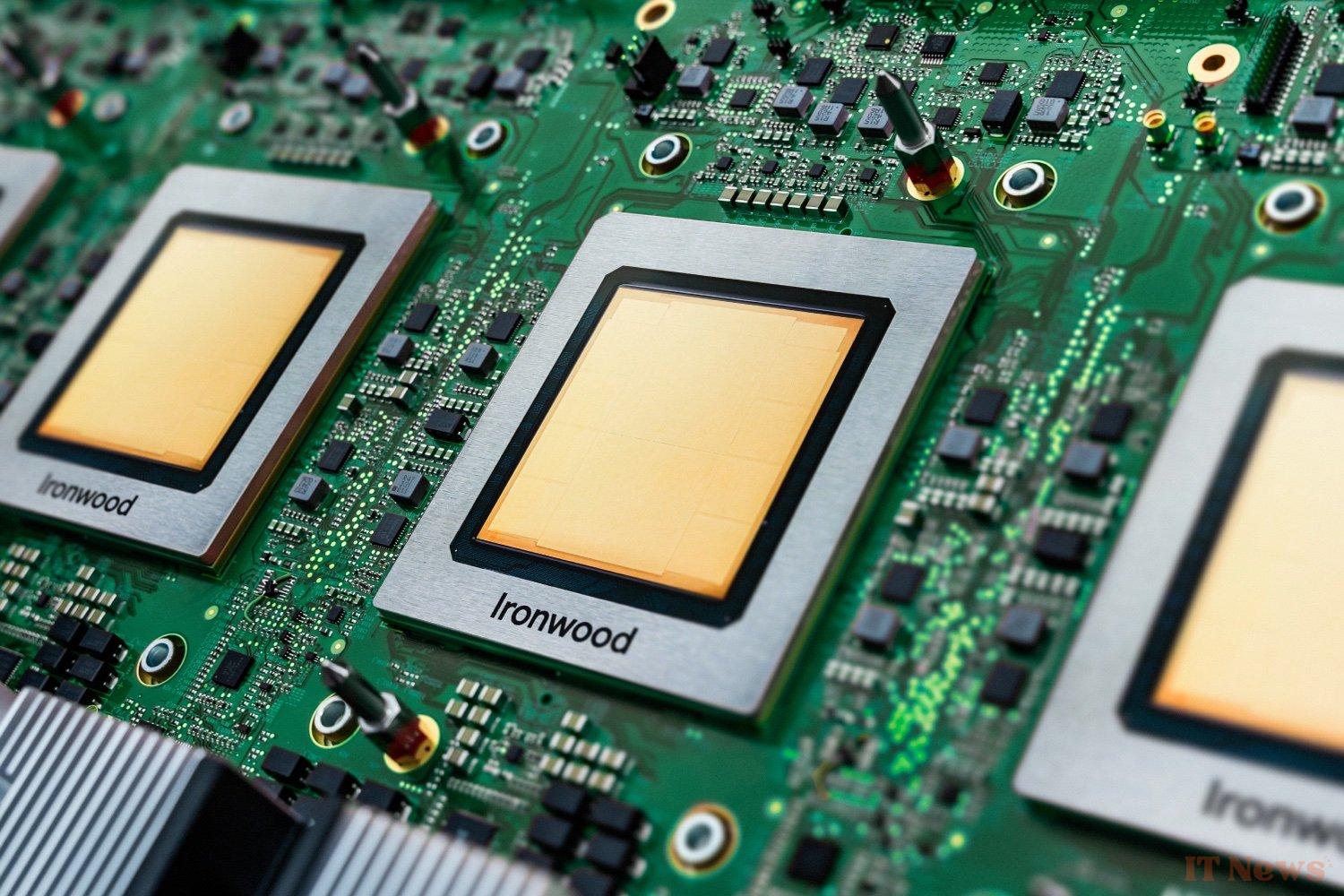

Google has unveiled Ironwood, its brand-new in-house chip dedicated to artificial intelligence. And this time, it's not just a refresh of the previous model. Ironwood was designed specifically for inference, that is, for running AI models after they have been trained. This is the stage at which a model such as ChatGPT or Gemini answers the user's questions. It is a very demanding type of computation, and Google has clearly decided to go all in.

A chip to run AI models, not to train them

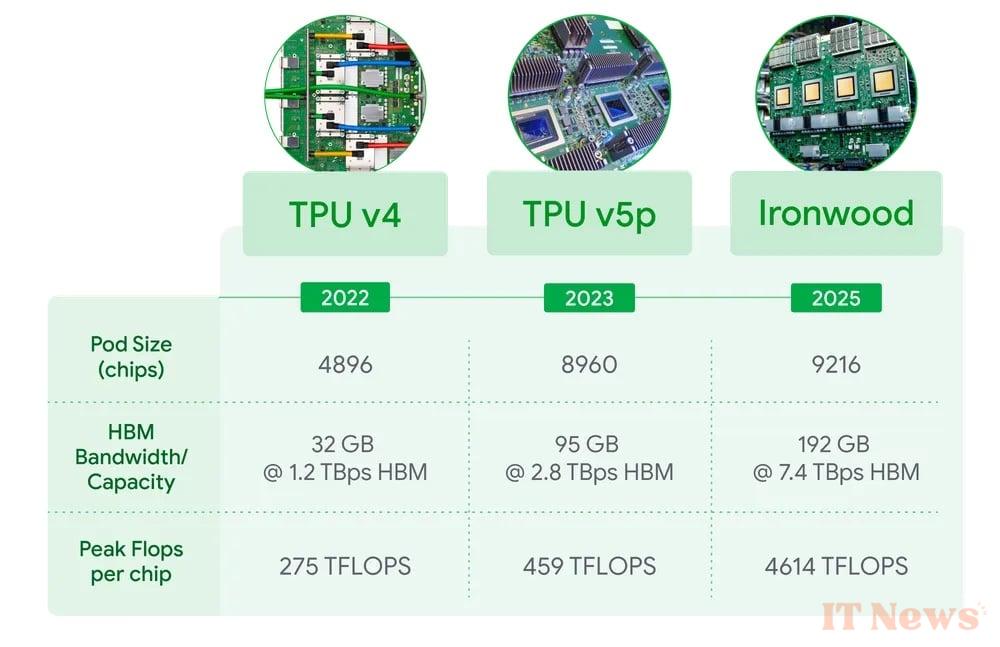

Ironwood is the seventh generation of Google's TPUs (Tensor Processing Units). These chips are not sold individually: they are used internally and made available to customers via Google Cloud. On the technical side, the headline figures are striking: each chip can reach a peak of 4,614 teraflops, and up to 9,216 chips can be grouped together to form a giant "pod." At this scale, the system reaches 42.5 exaflops, which Google says far exceeds the most powerful supercomputers of the moment.
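As a quick sanity check, the pod figure follows directly from the per-chip number. The snippet below is a back-of-the-envelope sketch, not a benchmark: it assumes peak throughput simply adds up across chips, and the variable names are illustrative.

```python
# Figures quoted in the article
TFLOPS_PER_CHIP = 4_614   # peak teraflops per Ironwood chip
CHIPS_PER_POD = 9_216     # maximum number of chips in one pod

# Assumption: peak throughput scales linearly with the number of chips
pod_teraflops = TFLOPS_PER_CHIP * CHIPS_PER_POD
pod_exaflops = pod_teraflops / 1_000_000  # 1 exaflop = 1,000,000 teraflops

print(f"{pod_exaflops:.1f} exaflops")
```

This is a theoretical peak; what a real workload actually sustains depends on the model and on how well it is distributed across the pod.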

The chip architecture has been redesigned to avoid bottlenecks. More memory (192 GB per chip), more throughput (7.2 TB/s of memory bandwidth), better communication between chips: everything is there to ensure that huge AI models can run smoothly. And despite this ramp-up, Ironwood is twice as energy-efficient as Trillium, the previous generation.
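To give a rough sense of what these memory figures mean, the sketch below works out two derived numbers: how long one chip takes to read its entire memory once, and how much memory a full pod of 9,216 chips holds in total. It uses only the figures quoted in the article, with decimal units (1 TB = 1,000 GB); the variable names are illustrative, and the "full sweep" is a simplification rather than a model of any real workload.

```python
# Figures quoted in the article
MEMORY_GB_PER_CHIP = 192    # memory per chip
BANDWIDTH_TB_PER_S = 7.2    # memory bandwidth per chip
CHIPS_PER_POD = 9_216       # maximum number of chips in one pod

# Simplification: time for one chip to stream its whole memory once
sweep_seconds = MEMORY_GB_PER_CHIP / (BANDWIDTH_TB_PER_S * 1_000)
print(f"Full memory sweep: {sweep_seconds * 1_000:.1f} ms")

# Total memory across a full pod, in petabytes (1 PB = 1,000,000 GB)
pod_memory_pb = MEMORY_GB_PER_CHIP * CHIPS_PER_POD / 1_000_000
print(f"Pod memory: {pod_memory_pb:.2f} PB")
```

The first number hints at why bandwidth matters for inference: the faster a chip can read the data held in its memory, the faster it can produce each answer.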

With Ironwood, Google is primarily targeting companies that want to run complex AI in the cloud. The group highlights its ten years of experience in the field, and the fact that its own models, such as Gemini and AlphaFold, already run on these chips.

Google also offers a software environment called Pathways, developed by DeepMind, which makes it easier to control thousands of chips in parallel. This makes it possible to run very demanding models without having to worry about the infrastructure. Ironwood even integrates a specialized component, called SparseCore, to accelerate certain types of calculations, for example in recommendation systems, which might interest the finance, research, and e-commerce sectors.

So, no direct sales on the horizon. But in the race for AI power, Google is clearly placing its bets!
