Google is apparently rolling out what the firm called “Project Astra” a little less than a year ago: the ability for the Gemini AI to analyze a live video stream.

AI assistants such as OpenAI's ChatGPT or Google's Gemini can of course respond to text queries, but also to voice and even image-based ones, for instance when we give the AI a photo or an illustration. All that's missing is video, which Google wants to offer soon with Gemini on Android. It turns out that a user going by the pseudonym Kien_PS has had access to it on his Xiaomi smartphone for a few days, as a subscriber to Gemini Advanced (21.99 euros/month). Here's what it looks like.

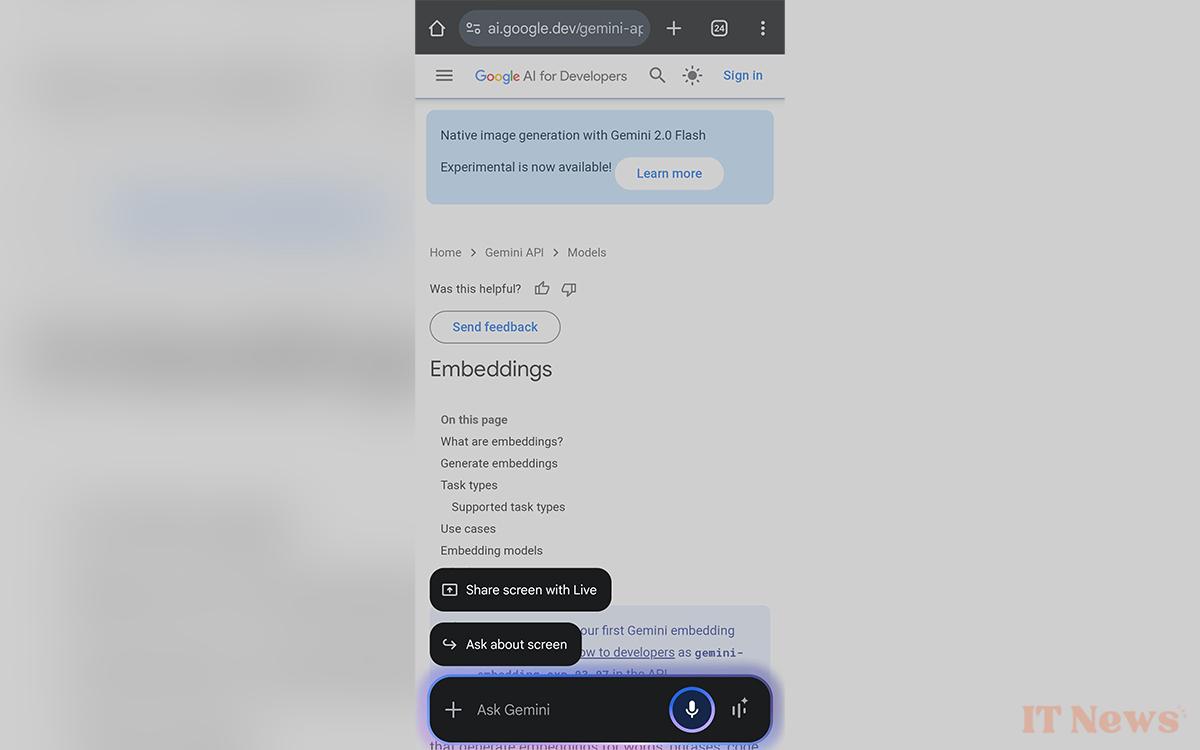

The Ask about screen button is the one we already know. The new one is located just above and could be translated as Share screen with Live (or Direct). Pressing it lets you film your surroundings and ask Gemini about what the screen is showing at a given moment.

Gemini gains eyes to analyze your videos in real time

Google demonstrated this feature almost a year ago under the name “Project Astra”. The demo gives a very good idea of the possibilities to come. In it, a person turns on their smartphone's camera and asks Gemini to say when the AI “sees” a device that makes sound, which it does when the user films a speaker. The user then draws an arrow on the speaker to get the name of a part of the device, then shows Gemini a computer screen displaying code and asks what it is for.


The possibilities are endless. This can be particularly useful when traveling, for example, but also at work, especially if Gemini is integrated into smart glasses, leaving your hands free. Judging by the video posted on Reddit by user Kien_PS, the feature that is apparently being rolled out isn't quite as good as Google's demo yet. It's still unclear when it will be available in our country.
