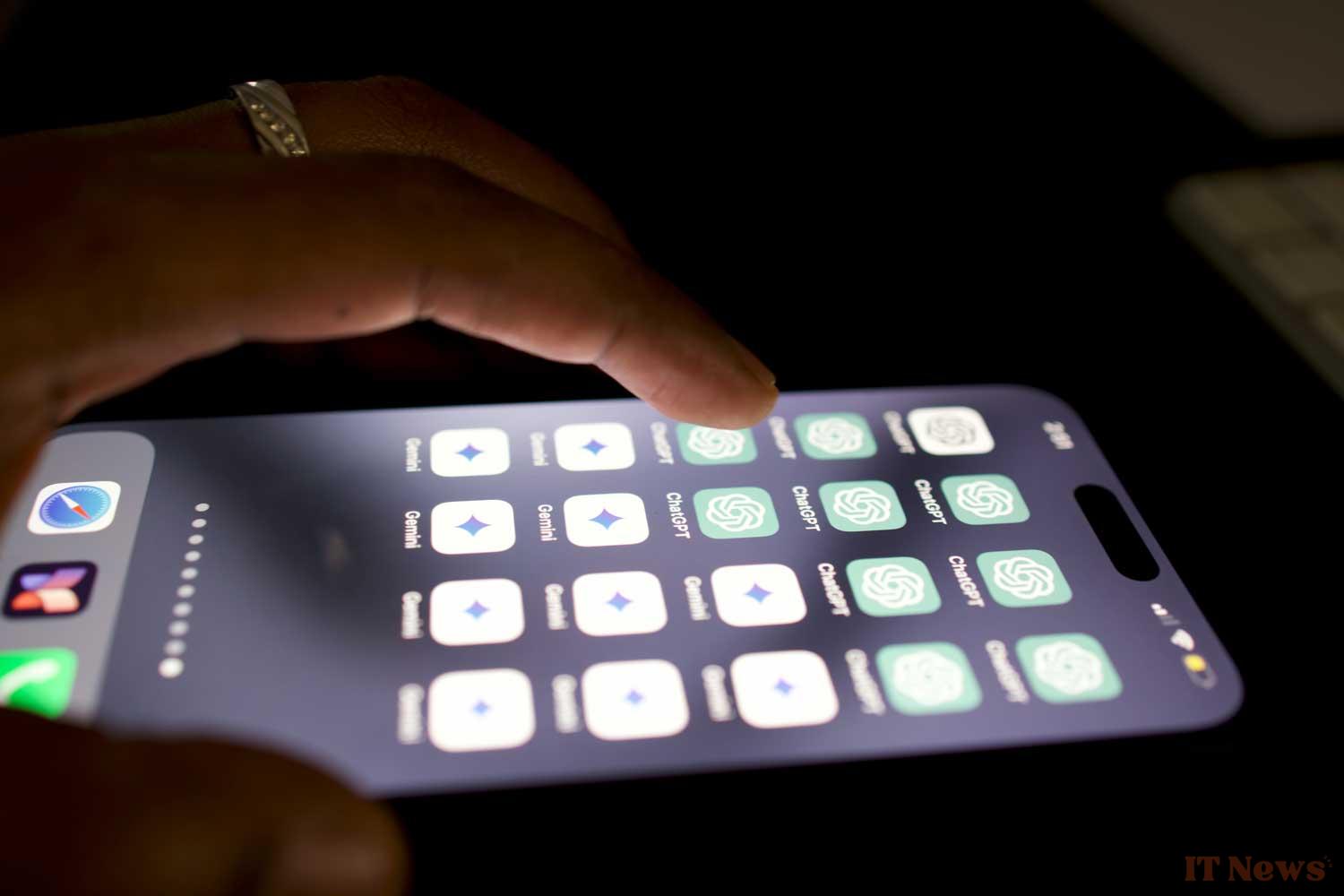

Conversational artificial intelligence has become an increasingly common part of our daily lives. Giants such as OpenAI with ChatGPT and Google with Gemini, not to mention DeepSeek, Perplexity, and Mistral AI with Le Chat, are competing to attract ever more users. Praised for their ability to generate text and images, answer questions, and even imitate human conversation, these generative AIs may nonetheless conceal an insidious trap: dependence among some intensive users. That is the serious warning raised by a recent joint study conducted by researchers from OpenAI, the company behind ChatGPT, and the prestigious MIT Media Lab.

The results of this research, based on an automated analysis of nearly 40 million interactions with ChatGPT, highlight a worrying phenomenon. Some intensive users, that is, those who interact with ChatGPT most frequently and for the longest periods, appear to develop a form of dependence, or even addiction. To test this hypothesis, the researchers conducted a second study in which 1,000 participants used ChatGPT for four weeks.

Based on these two studies, they identified "addiction indicators" among these "power users" of conversational AI: constant preoccupation with the chatbot, withdrawal symptoms when it is not used, loss of control over how long it is used, and mood changes that depend on interactions with the AI.

It has now been proven that you can be addicted to ChatGPT

The researchers point out that this dependence seems to develop particularly among people suffering from loneliness or going through difficult times. These users, whose personal lives may be less fulfilling, are more likely to regard ChatGPT as a "friend." In other words, those who most need human interaction may be the most likely to develop a deep parasocial relationship with AI.

While the consequences of such dependence are cause for concern, the study also produced some surprising results. Contrary to what one might expect, people who use ChatGPT for "personal" reasons, such as discussing their emotions or memories, are less emotionally dependent than those who use it for "non-personal" reasons, such as seeking advice or brainstorming. The study also found that users tend to use more emotional language with the text version of ChatGPT than with voice mode. In an earlier report, cited by Radio France, OpenAI had already flagged certain risks posed by the voice version of its AI, warning that the audio features of the latest version of ChatGPT could make users "emotionally addicted."

As with social media, video games, pornography, or screens in general, this study points to the dangers of prolonged use. It also underlines the urgent need to reflect deeply on our relationship with generative AI.
