An upcoming beta of iOS 18.5 and macOS 15.5 (both currently in their second beta) will introduce a discreet but potentially decisive new feature to help Apple Intelligence compete with rivals from OpenAI, Microsoft, Meta, and Google. Apple will begin analyzing certain data stored on its users' devices, such as samples of recent emails, to improve the performance of its generative AI platform.

Local email analysis, without transfer

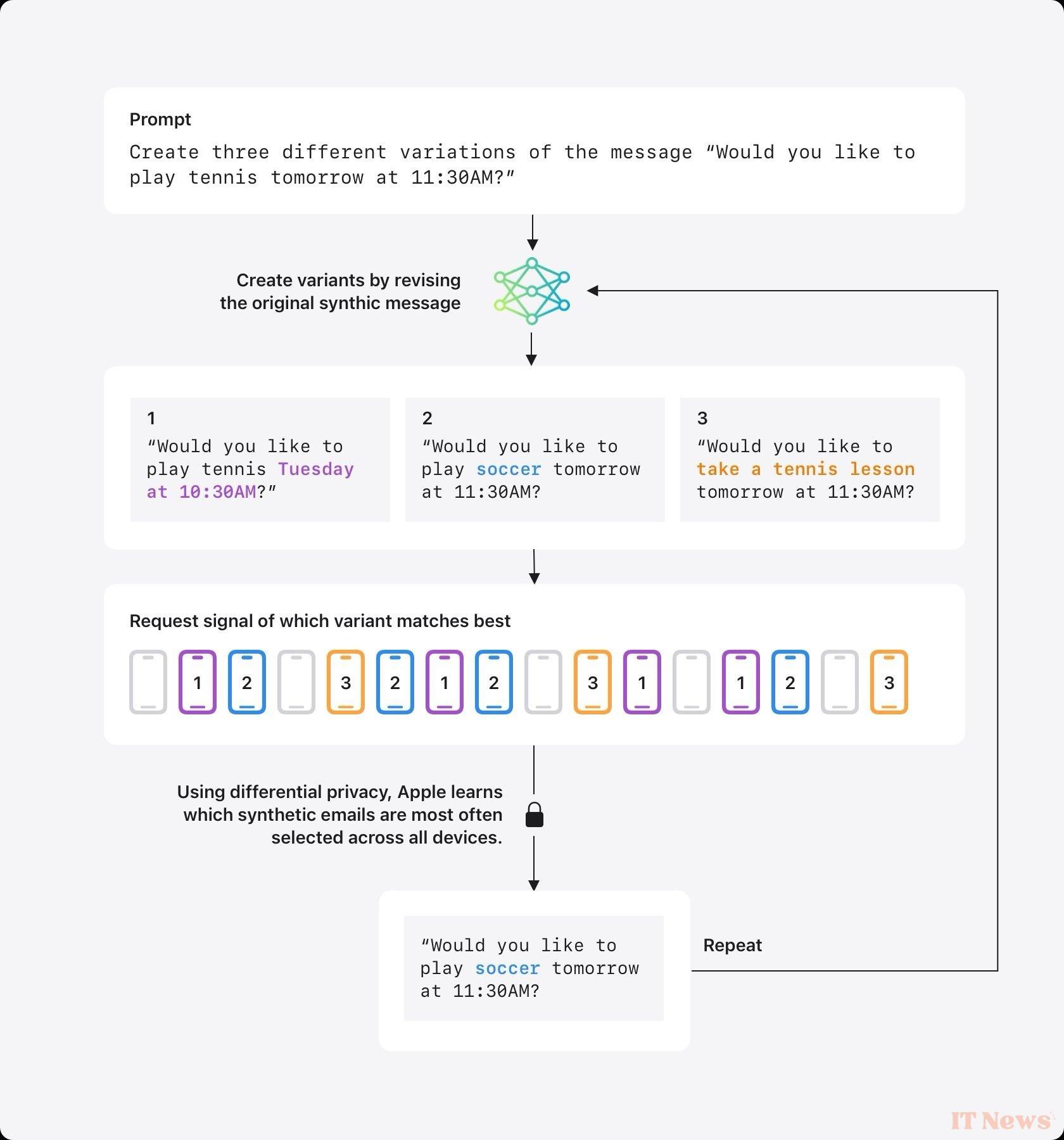

Until now, Apple has trained its AI models on synthetic data, that is, information designed to imitate real data without including any personal details that could be traced back to a user. This strong commitment sets the company apart from the rest of the industry. Unfortunately, synthetic data does not always reflect how people actually use their devices, which reduces the effectiveness of Apple Intelligence features.

By using data stored locally on the user's iPhone, iPad, or Mac (data that is not transferred to Apple's servers), this new approach aims to correct the current limitations of Apple's system, including errors in notification and content summaries.

Here's how this new feature works:

- Apple generates fictitious emails using language models;

- Each of these messages is converted into a fingerprint;

- Users' devices compare these fingerprints to real emails stored locally;

- A single, aggregated, anonymous signal is sent back to Apple, indicating the most relevant synthetic data.
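The four steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not say how Apple computes its fingerprints, so the hashed bag-of-words vector, the `DIM` size, and the function names `fingerprint`, `closest_synthetic`, and `aggregate` are all hypothetical stand-ins. The point is simply that the device reports which synthetic message is closest, never the content of a real email.

```python
import hashlib
import math
from collections import Counter

DIM = 256  # size of the toy fingerprint vector (an assumption, not Apple's value)


def fingerprint(text: str) -> list[float]:
    """Toy fingerprint: hash each word into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two already-normalized vectors."""
    return sum(x * y for x, y in zip(a, b))


def closest_synthetic(synthetic_fps: list[list[float]], local_emails: list[str]):
    """Runs on the device: returns only the index of the synthetic fingerprint
    that most local emails resemble. No email text leaves this function."""
    if not local_emails:
        return None
    votes = Counter()
    for email in local_emails:
        fp = fingerprint(email)
        best = max(range(len(synthetic_fps)), key=lambda i: cosine(synthetic_fps[i], fp))
        votes[best] += 1
    return votes.most_common(1)[0][0]


def aggregate(device_reports: list[int]) -> Counter:
    """Runs on the server: counts how often each synthetic message was selected."""
    return Counter(r for r in device_reports if r is not None)


# Steps 1 and 2: synthetic emails and their fingerprints (hypothetical examples).
synthetic = [
    "would you like to play tennis tomorrow at noon",
    "your invoice for march is attached",
]
synthetic_fps = [fingerprint(s) for s in synthetic]

# Step 3: each device compares the fingerprints with its own local emails.
device_a = closest_synthetic(synthetic_fps, ["want to play tennis tomorrow", "tennis at noon works for me"])
device_b = closest_synthetic(synthetic_fps, ["the march invoice is attached"])

# Step 4: only the selected indices are combined into an aggregate signal.
totals = aggregate([device_a, device_b])
```

In this sketch the server ends up with a tally per synthetic message, which is enough to tell which fictitious emails look most like real ones without ever seeing a real one.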

It's important to remember that Apple doesn't "read" emails. The analysis is done locally, solely to compare real messages with the fingerprints of synthetic emails. Email content is never shared, and you must first agree to share analytics data with Apple (an option available under Privacy & Security > Analytics & Improvements in Settings). This method allows Apple to improve its models without collecting or viewing user messages.

By comparing synthetic data with the user's "real" emails, Apple hopes to improve its summarization, writing assistance, and message analysis tools. In addition to generating this synthetic data, Apple also relies on the principle of differential privacy, a pillar of its privacy policy.

For features that place lighter demands on the models, such as Genmoji, Apple uses a "noisy" signal system: devices respond anonymously to indicate whether a word or query is frequently used, without ever transmitting exact or identifiable content.
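To give an idea of how a "noisy" signal can still be useful, here is a minimal sketch of randomized response, a textbook local differential privacy technique. It is swapped in purely for illustration: the article does not describe Apple's actual mechanism, and the names `randomized_response` and `estimate_true_rate` as well as the `epsilon` value are assumptions. Each device sometimes lies about whether it has seen a given word, so no single answer can be trusted, yet the true frequency can still be estimated across many devices.

```python
import math
import random


def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true answer with probability e^eps / (e^eps + 1), else flip it."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return truth if rng.random() < p_truth else not truth


def estimate_true_rate(reports: list[bool], epsilon: float) -> float:
    """Server side: undo the known noise to estimate the real share of 'yes' answers.

    If pi is the true rate and p the truth probability, the observed rate is
    pi * p + (1 - pi) * (1 - p), which is solved here for pi.
    """
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


# Hypothetical population: 30% of 20,000 devices have used a given word.
rng = random.Random(0)
epsilon = 1.0  # privacy budget chosen for the example only
truths = [True] * 6000 + [False] * 14000
reports = [randomized_response(t, epsilon, rng) for t in truths]
estimate = estimate_true_rate(reports, epsilon)
```

A smaller `epsilon` makes each individual answer noisier, and therefore more private, at the cost of needing more devices to obtain a reliable estimate.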

This new feature is undoubtedly one of the first consequences of the changing of the guard within Apple's AI division. After the postponement of features that would have allowed Siri to give more personalized responses, the teams responsible for developing the AI are now overseen by Craig Federighi, Apple's senior vice president of software engineering.

Source: Bloomberg
