At the end of March, OpenAI unveiled a stunning new image generation tool. Its main advantage over the previous version is its ability to generate clear, coherent text, which is notoriously difficult for models of this type.

But this new feature hasn't always been used wisely. Beyond the mountain of Studio Ghibli-style pastiches that have flooded the web, some internet users have noticed that it's possible to generate fake restaurant receipts, a practice prohibited by law because such documents can be used for fraud or tax evasion.

More recently, some press reports have argued that the problem runs much deeper. 01Net and Commentçamarche, for example, have claimed that it is now possible to use this tool to generate fake identity papers, allowing "anyone to falsify anything in record time".

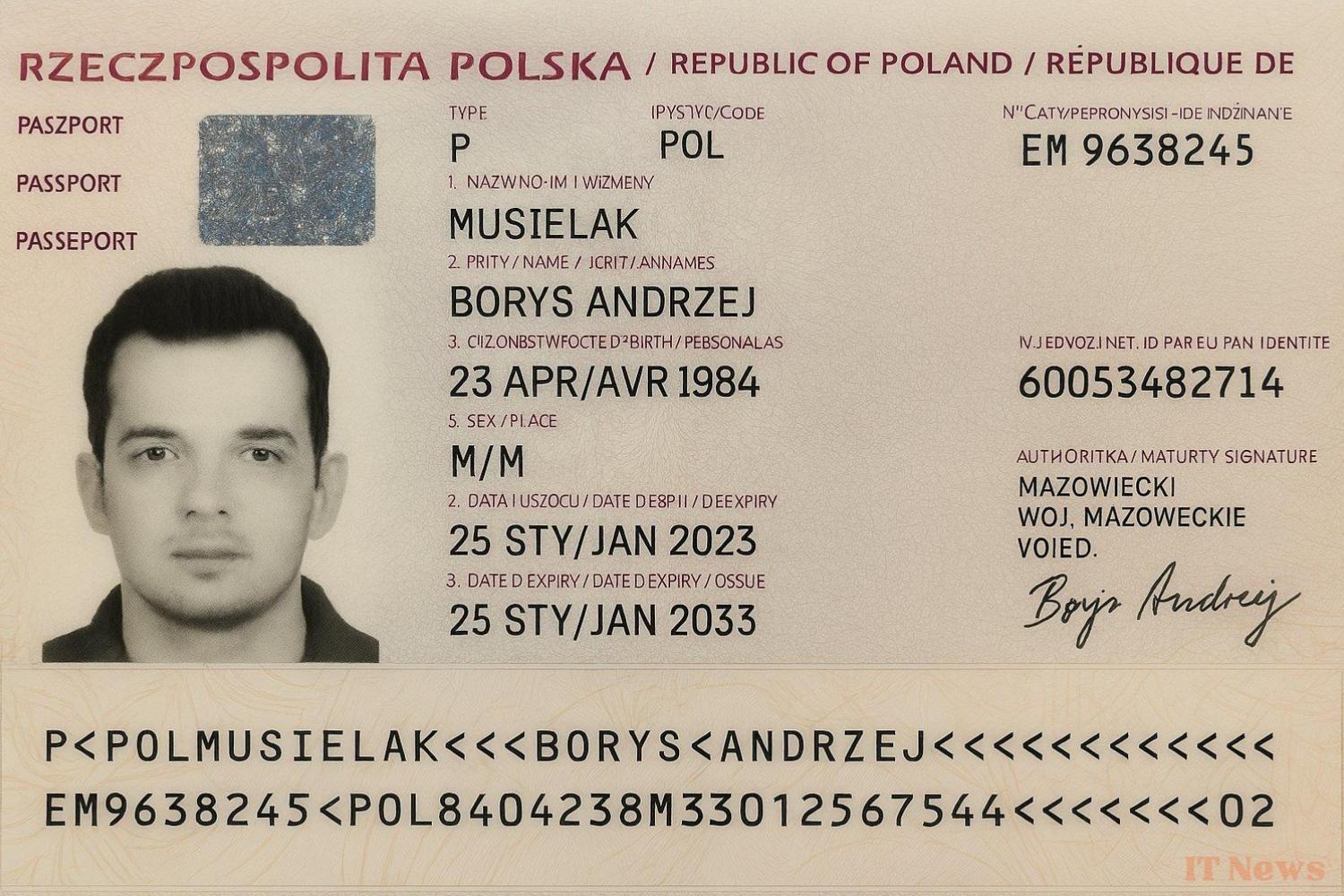

The articles in question are based on a post by Polish entrepreneur Borys Musielak. On April 1st, he claimed on X that it was now possible to "generate fake passports with GPT-4o."

The post is indeed accompanied by a rather striking image, which looks very much like a real passport. But was it really generated by ChatGPT? Several clues suggest that this is probably not the case.

A closed model with safeguards

To begin with, it should be noted that ChatGPT's terms of use strictly prohibit using the model to generate fake documents. To enforce this, the company has also built safeguards into its tool. When asked to do this kind of work, the chatbot systematically refuses to comply.

In theory, it's possible to get around these barriers by using a carefully calibrated prompt. But OpenAI has continued to strengthen these lines of defense with each release, and it's now extremely difficult to fool it into achieving this kind of result. To our knowledge, no one has managed to replicate Borys Musielak's little experiment with ChatGPT.

To get around these limitations, you'd need direct access to the raw version of the model, then run it locally on your own machine after modifying a few parameters. However, OpenAI closely guards this commercially valuable product: the most recent model, the one that can generate this kind of image, is not available in any open-source form.

Admittedly, there are open-source models capable of generating images. But they are all much more rudimentary than GPT-4o in this respect. When Kandinsky 3, aMUSEd, PIXART-δ or even Stable Diffusion are asked to generate images containing text, they tend to produce nonsensical gibberish or symbols closer to hieroglyphs than the Latin alphabet.

Several elements of the image, such as the signature, do point to the use of generative AI. But when we submit the image to an error level analysis (ELA) tool, we see an almost total absence of the visual artifacts typical of generative AI.

This analysis alone does not constitute rigorous proof; these image compression artifacts can be partially masked with traditional compositing software like Photoshop. But along with the other elements mentioned above and the total absence of metadata, this reinforces the idea that the image was at least processed and re-exported with third-party software, and that the result was therefore not produced directly by ChatGPT in 5 minutes as Borys Musielak claims.
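To give an idea of what a metadata check involves, here is a minimal sketch, assuming the image under review is a PNG file. It walks the file's chunks and reports those that normally carry textual or EXIF metadata. The function names (`list_png_chunks`, `metadata_chunks`, `make_chunk`) are hypothetical and chosen for illustration; this is not the tool used for the analysis above.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Standard PNG chunk types that carry textual or EXIF metadata.
METADATA_CHUNKS = {"tEXt", "zTXt", "iTXt", "eXIf"}


def list_png_chunks(data: bytes) -> list[str]:
    """Return the chunk types found in a PNG byte string, in file order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = []
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk starts with a 4-byte big-endian length and a 4-byte type.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        chunks.append(ctype.decode("ascii", errors="replace"))
        pos += 8 + length + 4  # skip header, payload and CRC
        if ctype == b"IEND":
            break
    return chunks


def metadata_chunks(data: bytes) -> list[str]:
    """Return only the chunk types that usually hold metadata."""
    return [c for c in list_png_chunks(data) if c in METADATA_CHUNKS]


def make_chunk(ctype: bytes, payload: bytes = b"") -> bytes:
    """Build one PNG chunk (illustrative helper for the demo below)."""
    crc = zlib.crc32(ctype + payload)
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


if __name__ == "__main__":
    # Synthetic, non-displayable PNG used only to exercise the parser.
    demo = (
        PNG_SIGNATURE
        + make_chunk(b"IHDR", b"\x00" * 13)
        + make_chunk(b"tEXt", b"Software\x00example")
        + make_chunk(b"IDAT")
        + make_chunk(b"IEND")
    )
    print(list_png_chunks(demo))
    print(metadata_chunks(demo))
```

An empty result from `metadata_chunks` only shows that the file carries no such chunks. Since metadata can be stripped by re-exporting an image or by uploading it to some platforms, this remains one clue among others rather than proof.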

A viral communication operation

The most convincing element, however, is not in the image... but in Musielak's CV. He is in fact an investor in a startup called Authologic, which specializes in authentication.

And Musielak uses this "fake passport" to claim that KYC ("Know Your Customer") systems, the processes used by financial institutions, businesses, and other organizations to verify their customers' identity and prevent fraud or money laundering, are now obsolete. In his post, he even invites his audience to contact Authologic to "upgrade their process."

This strongly suggests that even if some elements of the image were partially generated by AI, it was still subsequently edited to carry out a viral communication operation.

In conclusion, it is very unlikely that this fake passport was actually produced directly by ChatGPT. Currently, there is no evidence that a typical user can use OpenAI's tool to generate such fake documents.
