AI is everywhere, and medicine is no exception. According to a 2024 Medscape survey, more and more French practitioners are using ChatGPT and other consumer AI tools in their daily practice, and this poses a serious risk to your medical data.

AI is everywhere, and healthcare is no exception. In just a few years, artificial intelligence has already revolutionized care in certain specialties such as oncology, radiology, and ophthalmology. The big names in tech, for their part, are looking to integrate AI into their healthcare services. Apple, for example, has announced the arrival of an AI agent in its Health app. The idea is to turn the app into a true virtual medical assistant capable of analyzing users' medical data.

Google's teams, meanwhile, are currently testing Articulate Medical Intelligence Explorer (AMIE), a generative AI designed to diagnose medical conditions through a simple conversation.

You probably won't be surprised, then, to learn that many French practitioners are already using consumer AI tools like ChatGPT in their daily practice. In October 2024, Medscape, a specialist website reserved for healthcare professionals, published a survey titled “French Doctors and AI”.

50% of French doctors use AI every day

The report focuses on doctors' fears about AI, their aspirations, and their usage habits. Among all the figures presented, one caught our attention: 50% of the roughly 1,000 French healthcare professionals surveyed currently use ChatGPT or another consumer AI tool in their work:

- 20% to research pathologies

- 12% to perform administrative tasks

- 11% to diagnose pathologies

- 9% to manage patient appointments

- 9% to complete electronic health records

- 8% to write a consultation report

The problem is that consumer AI tools like ChatGPT were never designed for use in a medical setting. They do not comply with the health data security and confidentiality standards set, in particular, by the GDPR and the AI Act, the EU's new legal framework for AI.

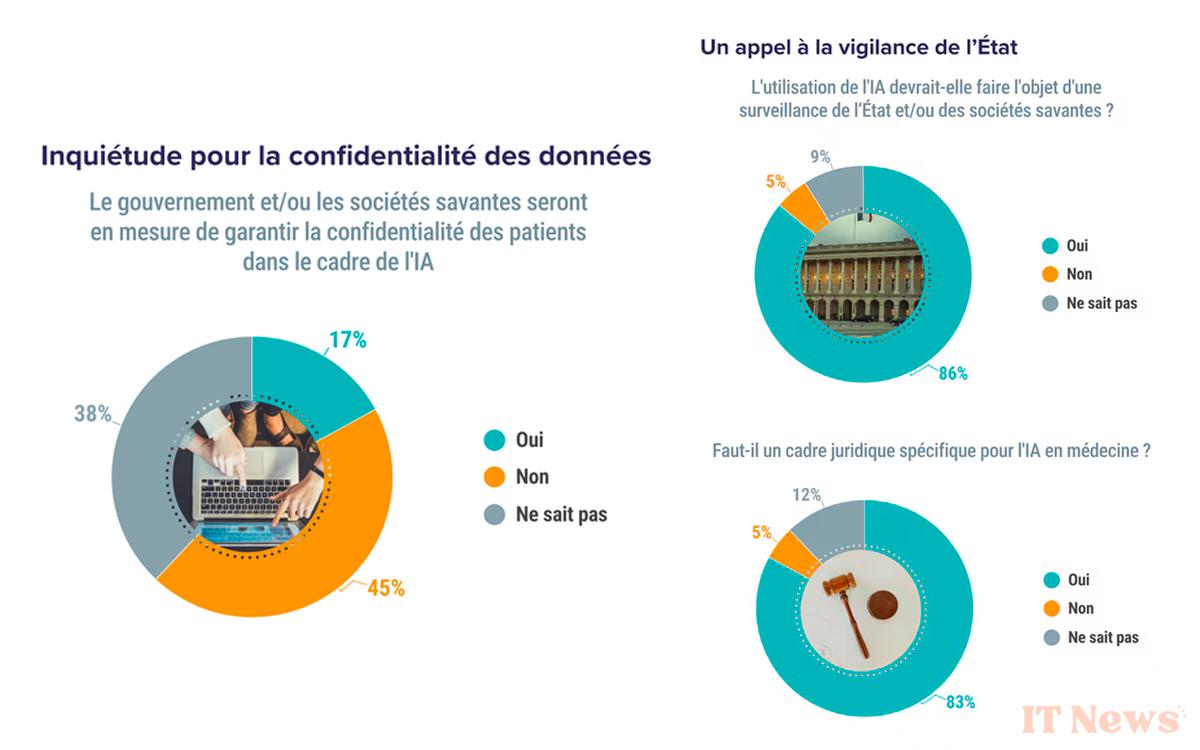

Data Privacy Concerns

Some practitioners are well aware of the problem: “There are too many possibilities for hacking, the French IT network is not reliable enough, nor robust enough,” says a public health doctor in the Medscape survey. “IT is not 100% reliable and I fear the risk of bugs, but also attempts at hacking and data theft,” adds another specialist based in the Provence-Alpes-Côte d'Azur (PACA) region.

This is why 83% of the doctors surveyed are calling for public authorities to regulate and control the use of AI. The AI Act is intended to address these concerns by imposing strict obligations on AI systems operating in high-risk sectors such as healthcare, energy, and transportation.

Although the AI Act officially came into force on August 1, 2024, most of its provisions will not apply until August 2026. Even then, some of the rules relating to high-risk AI systems will not apply until August 2027. In the meantime, doctors who use ChatGPT or other consumer AI tools may still be putting their patients' data at risk.

Doctors are taking the lead

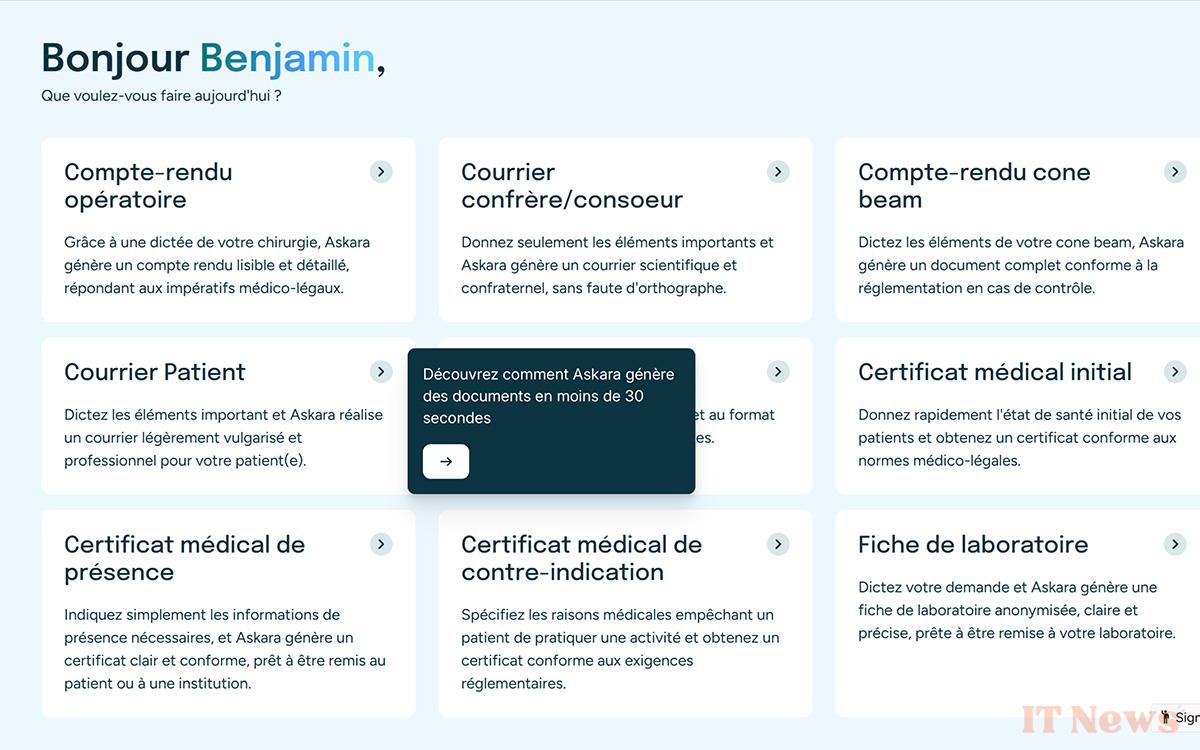

While waiting for European and French authorities to fully regulate the use of AI in the medical field, some practitioners have decided to take action. Among them are Frank Bézu and Benjamin Fitouchi, two French dental surgeons.

Faced with the lack of AI solutions dedicated to healthcare professionals, these two technology enthusiasts created Askara, an intelligent voice assistant designed for dentists. The idea is simple: practitioners dictate their conclusions or reports, and the AI synthesizes and formats everything in a few seconds. It can also produce certain documents, such as prescriptions, initial medical certificates, or even laboratory records.

A 100% secure French AI for practitioners

In itself, ChatGPT can do all of this very well with the right prompts. Where Askara differs is in how it handles data. First, all patient information is stored in France on the secure servers of Scalingo, a host based in Strasbourg. The platform is certified ISO 27001 and HDS (Hébergeur de Données de Santé, the French certification for health data hosts), and its clients include the Ministry of the Interior and Île-de-France Mobilités.

Furthermore, Askara strictly complies with the GDPR and does not contain any trackers or analytics tools. Patients and practitioners can, if they wish, exercise their rights to access, correct, and delete their personal data. At the time of writing, Askara is intended solely for dental practice management, but the project could very well expand to other specialties. In any case, healthcare professionals should turn to this type of initiative rather than ChatGPT and other consumer AI tools. The security of their patients' data depends on it.