Unsurprisingly, Apple used the opening keynote of WWDC 25 to announce a host of new features for Apple Intelligence. First, the Cupertino company announced that app developers will be able to use the AI model that underpins the suite of tools.

As explained by Craig Federighi, Apple's senior vice president of software engineering, developers will be able to use the group's artificial intelligence to create "new intelligent experiences" within their apps. This is a "major step" that will "pave the way for a new generation of intelligent experiences in everyday applications," the executive believes.

Apple then unveiled a slew of improvements intended to enrich Apple Intelligence, whose launch met with limited success. After following the WWDC keynote closely and combing through all of Apple's communications, we've pinpointed the three main changes to the suite of tools.

Live translation on the iPhone

The first is the arrival of live translation in Apple's communication apps: Messages, FaceTime, and the Phone app. Using Apple's AI models, which run locally on the device, Apple Intelligence will automatically translate conversations in supported languages.

In the Messages app, the AI translates conversations in both directions. The iPhone automatically translates the messages the user writes, as well as the replies received. In short, the AI allows two people who don't speak the same language to communicate.

The same applies to FaceTime calls: Apple's AI will display subtitles to help you understand what the other person is saying. During a traditional phone call, Apple's AI will dub the other person's words. You'll hear what they are saying in your own language, as if a live interpreter were translating.

Live translation in Messages supports English, French, German, Italian, Japanese, Korean, Portuguese, Spanish, and Simplified Chinese. On the call side, the feature is only available for one-to-one calls in English, French, German, Portuguese, and Spanish. Other languages are expected to be added in the future.

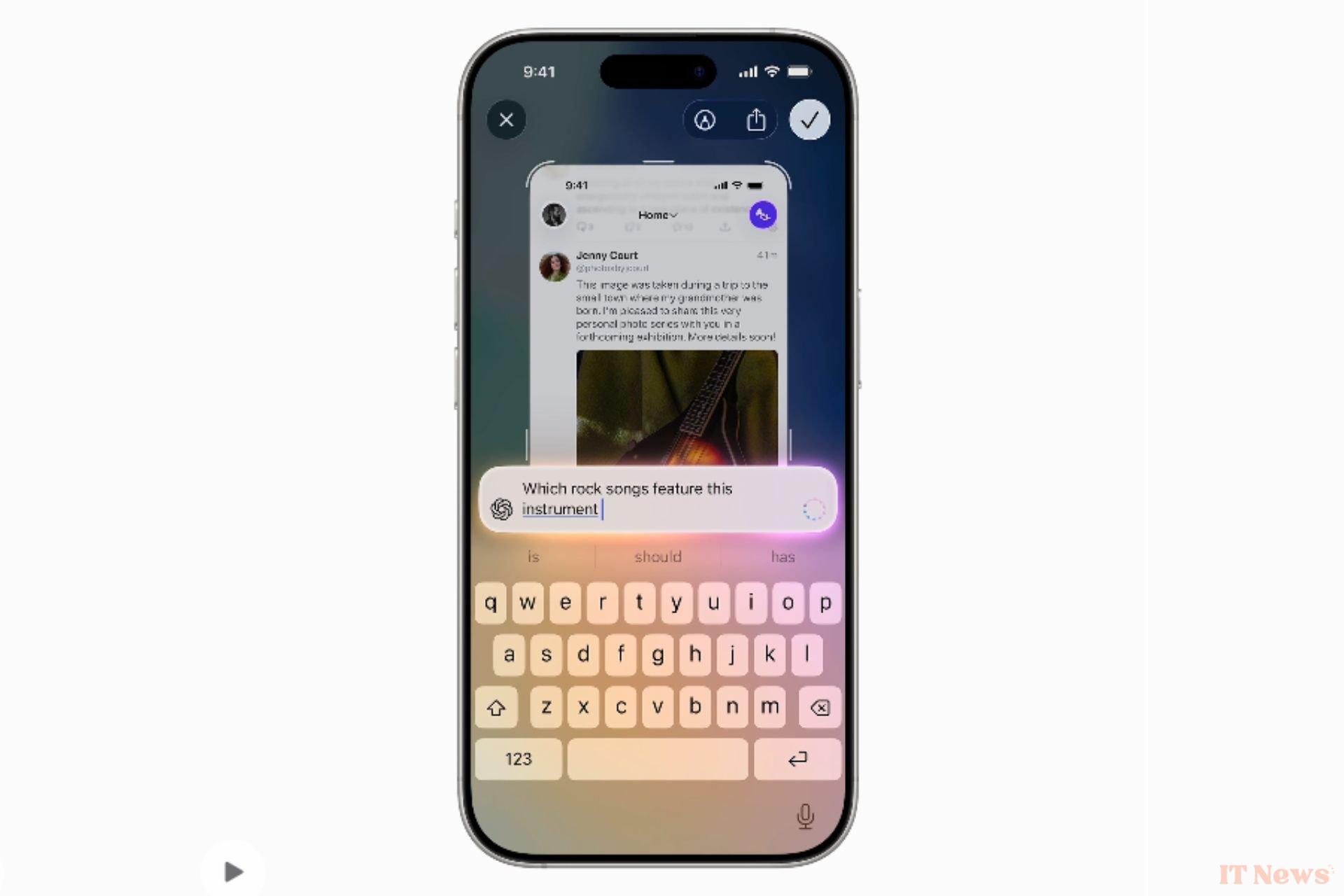

Visual Intelligence extends to your iPhone screen

Other new features in Apple Intelligence include the expansion of Visual Intelligence, one of the best AI-powered tools available on the iPhone. Using the camera, you can ask AI to analyze what you see and provide you with real-time information.

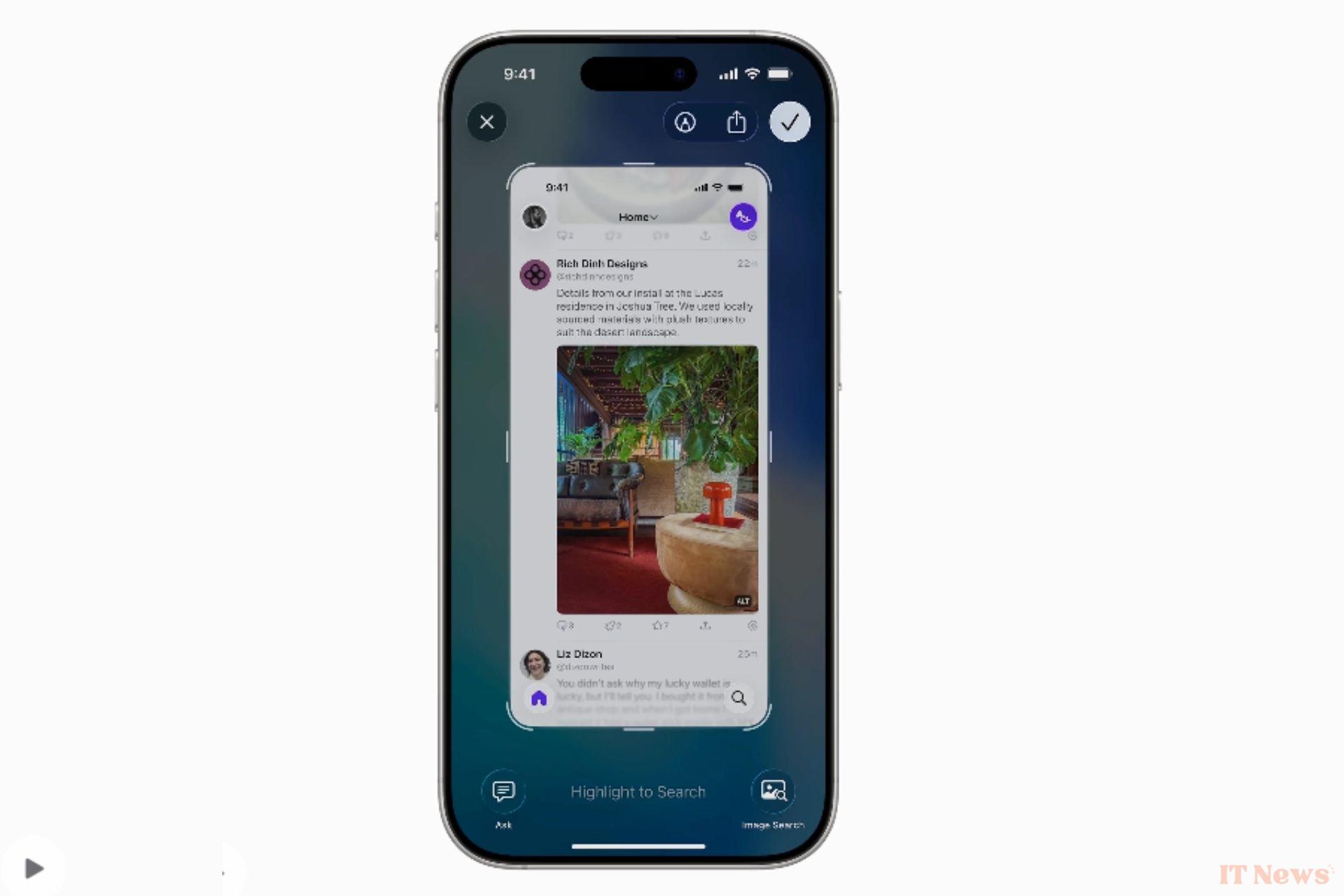

From now on, Visual Intelligence won't just scan your surroundings. The feature will also allow AI to view "a user's iPhone screen so it can search for and act on anything they view in their apps." While browsing a social network, an email, or the web, you can ask ChatGPT for information about what you see.

Apple also lets you search "Google, Etsy, or other supported apps to find similar images and products" to those displayed on the screen. This is ideal for shopping: if you're interested in an item, like a lamp, the AI will search online for that item or similar ones for you.

That's not all. Visual Intelligence will also scan the screen for potential events to add to the calendar. Once an event is found, the AI will ask whether you want to add it to your calendar. Apple Intelligence can then extract the date, time, and location and create the appropriate event. The feature is similar to the option that analyzes your messages and emails to find potential upcoming events. You can activate Visual Intelligence on whatever appears on the screen by pressing the same buttons used to take a screenshot, namely the side (lock) button and the volume up button.

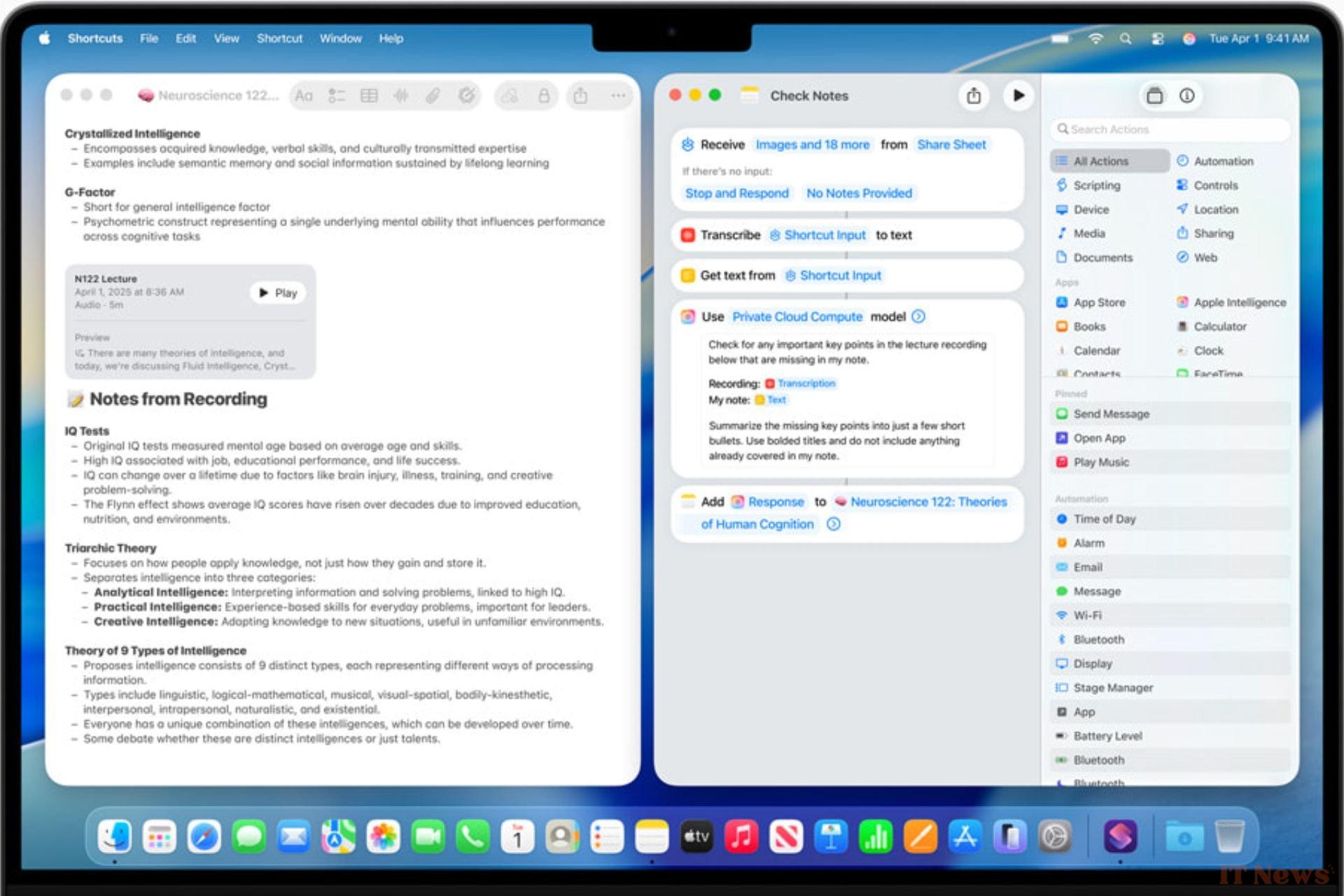

Shortcuts boosted by Apple Intelligence

Finally, Apple announced the arrival of Apple Intelligence within the Shortcuts application, which allows you to automate actions on your iPhone, iPad, or Mac. As the Californian group explains, Shortcuts will be able to take advantage of Apple's AI models, whether locally or in the cloud. Users will be able to "generate responses that serve to advance the rest of the shortcut" using AI, or even ChatGPT. In other words, the answers provided by the AI will be incorporated into the flow of the shortcut.

For example, a student could create a shortcut that uses AI to compare the audio recording of a lecture to their own notes. The shortcut can also include a step that automatically adds the points they forgot to write down to their notes. Apple calls shortcuts powered by Apple Intelligence "smart actions". By default, Apple will offer shortcuts that can summarize or generate text. On paper, the arrival of Apple Intelligence in Shortcuts looks very promising. By combining shortcuts with AI, Apple could even give a new dimension to the AI tools that have been available on its devices for several months.

All these features can be tested now by installing one of the developer beta updates, such as iOS 26 on the iPhone.
