OpenAI, the startup behind ChatGPT, is sounding the alarm. In a blog post published on Wednesday, June 18, 2025, the American company explains that it expects future AI models to be able to help Internet users design biological weapons. In the hands of ill-informed or inexperienced people, artificial intelligence could increase the risk of biological weapons being created without any oversight.

Last April, OpenAI had already raised the alarm about the risks AI poses when it comes to weaponized pathogens. To head off abuse, Sam Altman's startup took steps to stop the models underlying ChatGPT from providing advice about biological threats, building safeguards into o3 and o4-mini, two of its reasoning models.

OpenAI fears "novice uplift"

According to OpenAI, the danger posed by AI models in the field of biological agents is about to escalate. Interviewed by Axios, Johannes Heidecke, head of safety systems at OpenAI, says the firm expects that "some of the successors" to the o3 model will be able to guide a user through the creation of a biological weapon. The startup fears a phenomenon it calls "novice uplift". In short, AI could give anyone the ability to create potentially dangerous substances without understanding the risks.

In its blog post, the artificial intelligence giant says it has decided to step up testing of the AI models it currently has under development. OpenAI's risk mitigation plan includes assessing each model, and its potential for abuse, before any deployment. As part of these assessments, the firm commits to consulting recognized experts and public authorities. Two oversight bodies, one internal and one external, must both give their approval before an AI model is released.

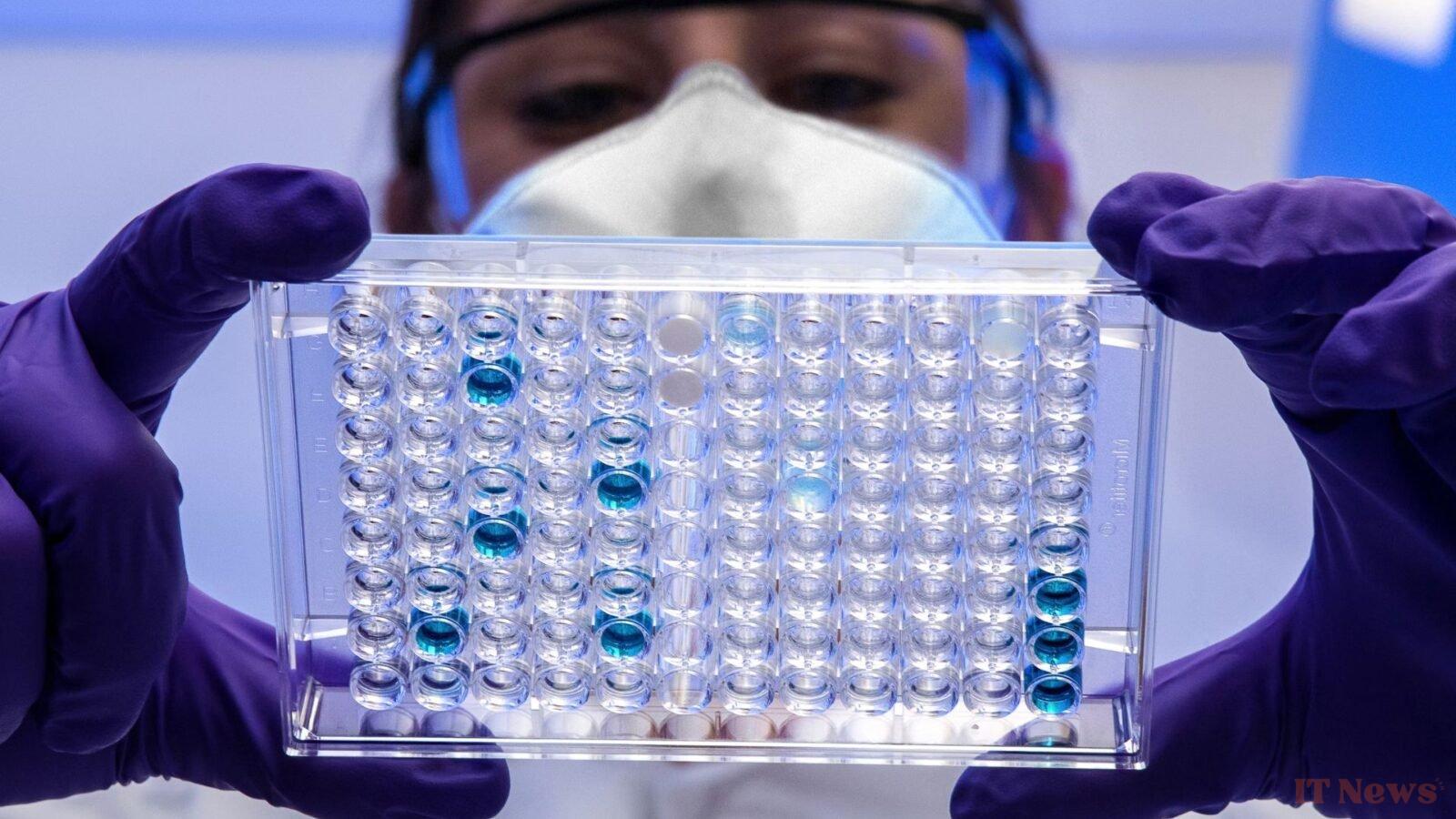

Furthermore, new security measures have been implemented to block all requests for help creating a virus. When a potentially harmful request is detected, OpenAI suspends the AI's response. The request is then reviewed first by an algorithm and then by a human.

As Johannes Heidecke explains, "We are not yet in a world where there is a completely new and unknown creation of biological threats that did not exist before." At this stage, the models are likely to facilitate the "reproduction of things that experts already know very well" by untrained individuals.

A balancing act

OpenAI is looking for the right balance between precaution and innovation. The startup points out that the AI capabilities that could lead to the design of biological weapons are the same ones likely to lead to major medical breakthroughs. Future AI models could "accelerate the discovery of new drugs, design more effective vaccines, create enzymes for sustainable fuels, and develop innovative treatments for rare diseases," OpenAI says.
