One of the main drawbacks of conventional chatbots such as ChatGPT and Gemini is that the confidentiality of your conversations is not guaranteed, because every request you make passes through the servers of the platform providing the service. To avoid this, you can use a generative AI that runs offline, locally on your device. This is exactly what Google offers with the AI Edge Gallery.

Large language models executed locally on your smartphone

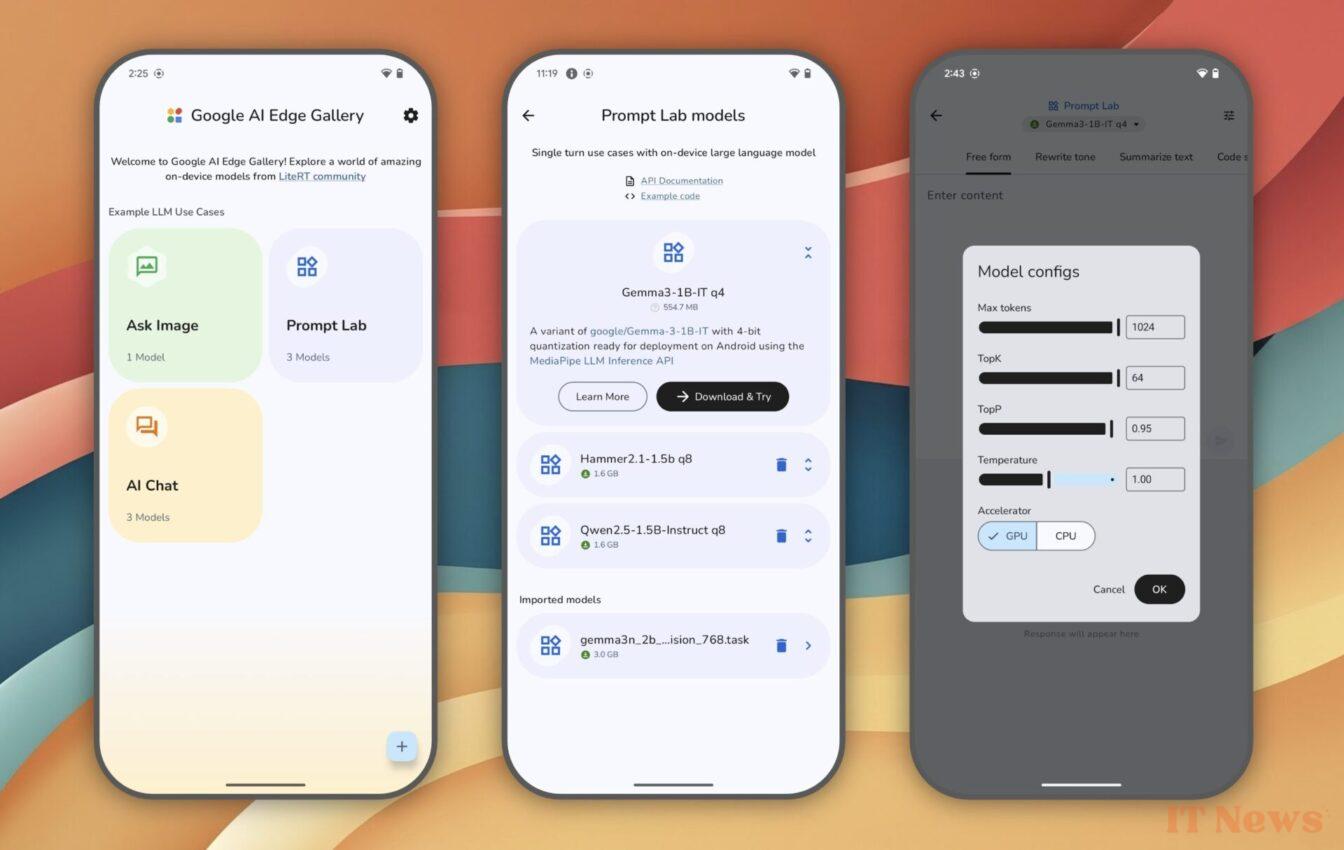

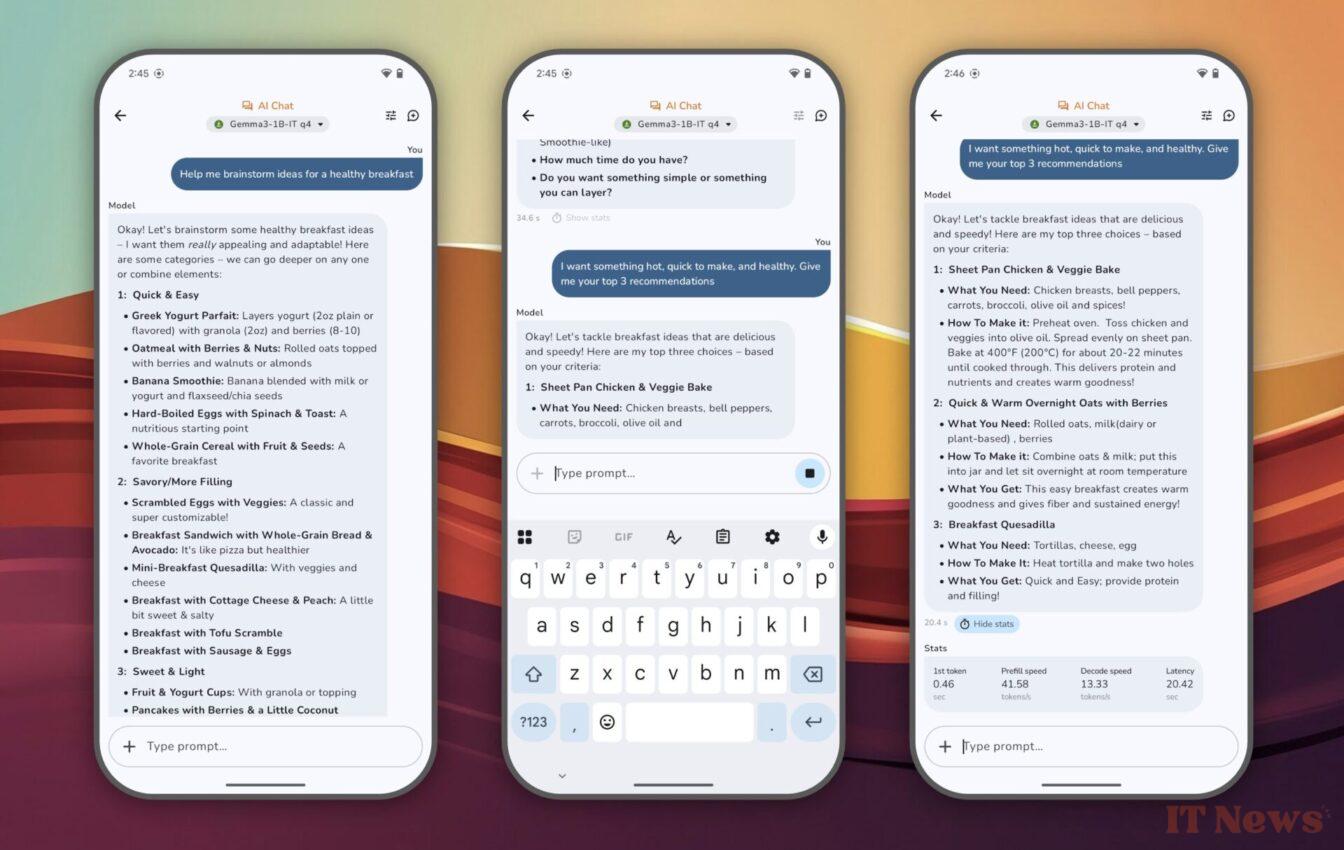

Published on GitHub, Google's AI Edge Gallery is an experimental application designed as a turnkey way to run large language models locally on your smartphone. The app, which is already available on Android and will be available on iOS very soon, lets you download different language models to your smartphone and then use them entirely offline, with all query processing handled on the device.

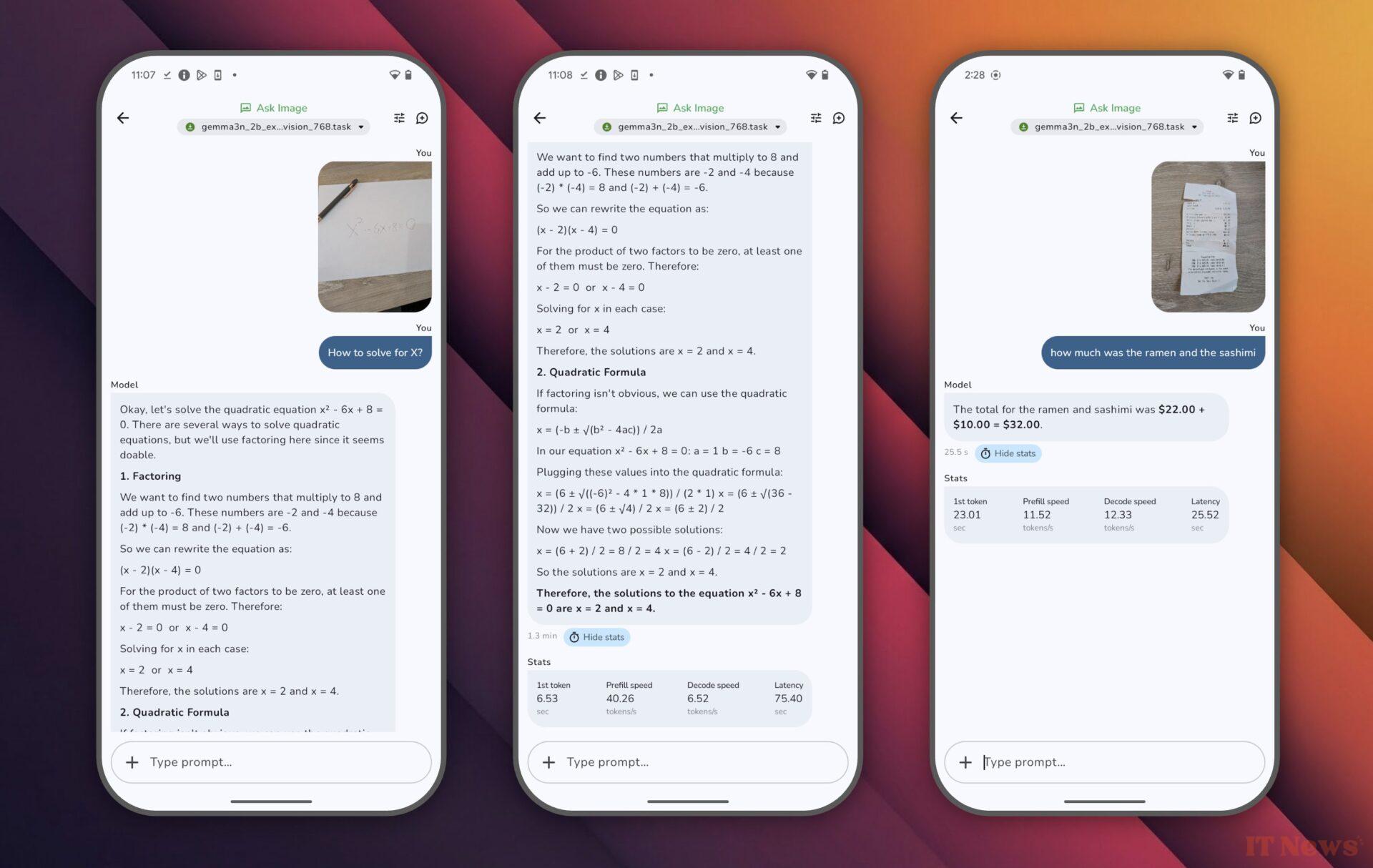

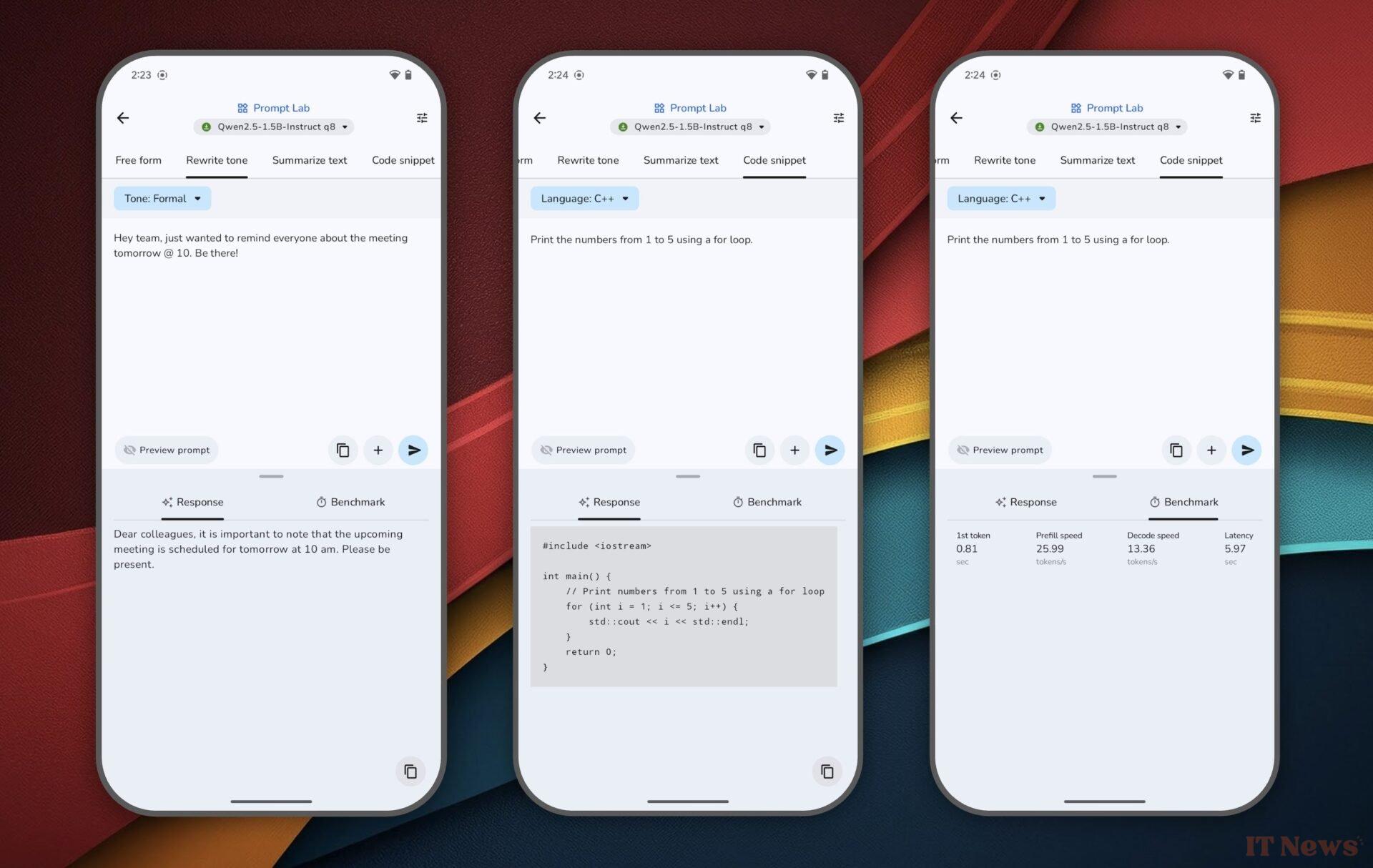

For now, the Google AI Edge Gallery allows you to switch between different language models from Hugging Face. The application offers several use cases: "Ask image," "Prompt Lab," and "AI Chat." The first lets you submit images and ask the models to identify objects or solve certain problems. The second lets you summarize and rewrite text, as well as generate code from a simple written request. Finally, the third lets you hold a conversation with the model you have chosen.

By default, the application offers to download four language models locally (Gemma-3n-E2B-it-int4, Gemma-3n-E4B-it-int4, Gemma3-1B-IT q4, and QWEN2.5-1.5B-Instruct q8), but it is entirely possible to import new ones.

Limitations to be aware of

The application, which is completely free, is already available for Android as an APK file that must be installed manually on your smartphone. No date has been confirmed yet, but an iPhone version should be available very soon.

While running these language models locally on your device offers greater privacy, there are trade-offs to consider. First of all, downloading these LLMs to your smartphone can take up a significant amount of storage, with some exceeding 4 GB. Furthermore, performance will vary depending on your device's hardware.
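To get a feel for the storage cost, here is a rough back-of-the-envelope sketch. It assumes that the size of a model's weights is approximately the parameter count multiplied by the bits per weight, and that the parameter counts and bit widths can be read directly from the model names (1B at 4 bits, 1.5B at 8 bits). The function name `estimate_weights_gb` is hypothetical, and real downloads will differ because of metadata, tokenizers, and layers stored at other precisions.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Rough size of the raw weights in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Assumption: parameter counts and quantization widths are taken from the
# model names listed in the article, not from measured file sizes.
models = {
    "Gemma3-1B-IT q4": (1.0, 4),
    "QWEN2.5-1.5B-Instruct q8": (1.5, 8),
}

for name, (params, bits) in models.items():
    print(f"{name}: ~{estimate_weights_gb(params, bits):.2f} GB")
```

Under these assumptions, halving the bits per weight halves the estimated size, which is why quantized variants are the ones offered for phones. The estimate is only an order-of-magnitude guide; check the actual download size shown in the app before installing a model.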

In any case, your requests will generally take longer to process locally on your smartphone than when they are sent to the servers of platforms such as ChatGPT and Gemini.

Source: Neowin
