It's a reflex that a majority of users of ChatGPT and similar generative AIs have quickly adopted: being polite to the AI and thanking it when it provides a satisfactory answer, as we would with a human being. But these hundreds of thousands of "please" and "thank you" messages sent to a chatbot necessarily have an energy cost, and therefore a financial one.

Several million dollars to answer users' "thank yous"

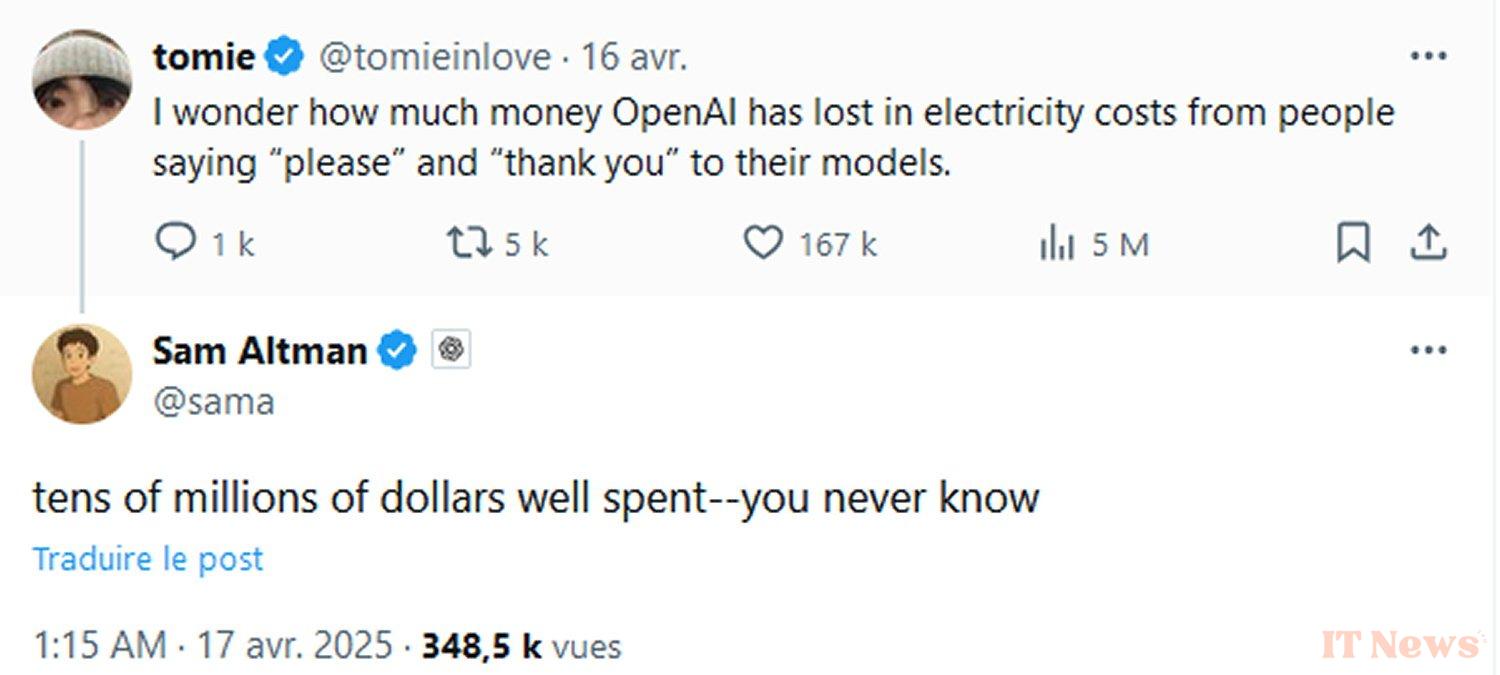

The subject reached Sam Altman, the CEO of OpenAI, through a question asked on the social network X. He responded in a humorous tone that being polite with ChatGPT cost his company tens of millions of dollars in electricity. We are talking here about standalone polite phrases that require a dedicated response from the AI, not a "please" slipped in at the end of a prompt alongside a specific question.

According to scientists at the University of California, a response of about a hundred words from an advanced generative AI model requires around 140 Wh of energy, the equivalent of around fifteen LED bulbs lit for an hour. The electricity bill can therefore quickly explode with the millions of daily requests that pass through OpenAI's servers and infrastructure.
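To get a feel for these orders of magnitude, the arithmetic can be sketched in a few lines of Python. Only the 140 Wh figure comes from the estimate quoted above. The 10 W bulb rating, the number of daily responses, and the electricity price are hypothetical parameters chosen for illustration, not figures from OpenAI or from the study.

```python
# Rough back-of-the-envelope sketch; not an official cost model.
WH_PER_RESPONSE = 140.0  # energy per ~100-word response, as quoted in the article


def led_bulb_hours(energy_wh, bulb_watts=10.0):
    """Hours a single LED bulb could stay lit on energy_wh.

    bulb_watts=10.0 is an assumed rating for a typical LED bulb.
    """
    return energy_wh / bulb_watts


def daily_cost_usd(responses_per_day, usd_per_kwh, wh_per_response=WH_PER_RESPONSE):
    """Daily electricity cost for a given number of responses.

    responses_per_day and usd_per_kwh are hypothetical inputs.
    """
    kwh = responses_per_day * wh_per_response / 1000.0  # convert Wh to kWh
    return kwh * usd_per_kwh


if __name__ == "__main__":
    # One response expressed in bulb-hours (i.e. bulbs lit for one hour).
    print(led_bulb_hours(WH_PER_RESPONSE))
    # Hypothetical scenario: one million polite replies a day at $0.10 per kWh.
    print(daily_cost_usd(1_000_000, 0.10))
```

With a 10 W bulb, one response works out to 14 bulb-hours, which is consistent with the "around fifteen LED bulbs" comparison. The daily cost scales linearly with both the number of responses and the price per kWh, so the result is only as good as those two guesses.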

Does this mean we should change our habits and stop being polite with ChatGPT? Probably not: even if the chatbot explains, when asked specifically, that it does not expect deference from its interlocutor, it would seem that blunt instructions and frank directives, without any sign of politeness, surprisingly lead to a less natural response from the AI. As for the more superstitious, they probably hope that the anthropomorphism they show towards artificial intelligences will work in their favor once those AIs reach the technological singularity. Skynet, if you're reading this, thank you...
